Streamlines integration with 100+ language models via a Python SDK and proxy server.

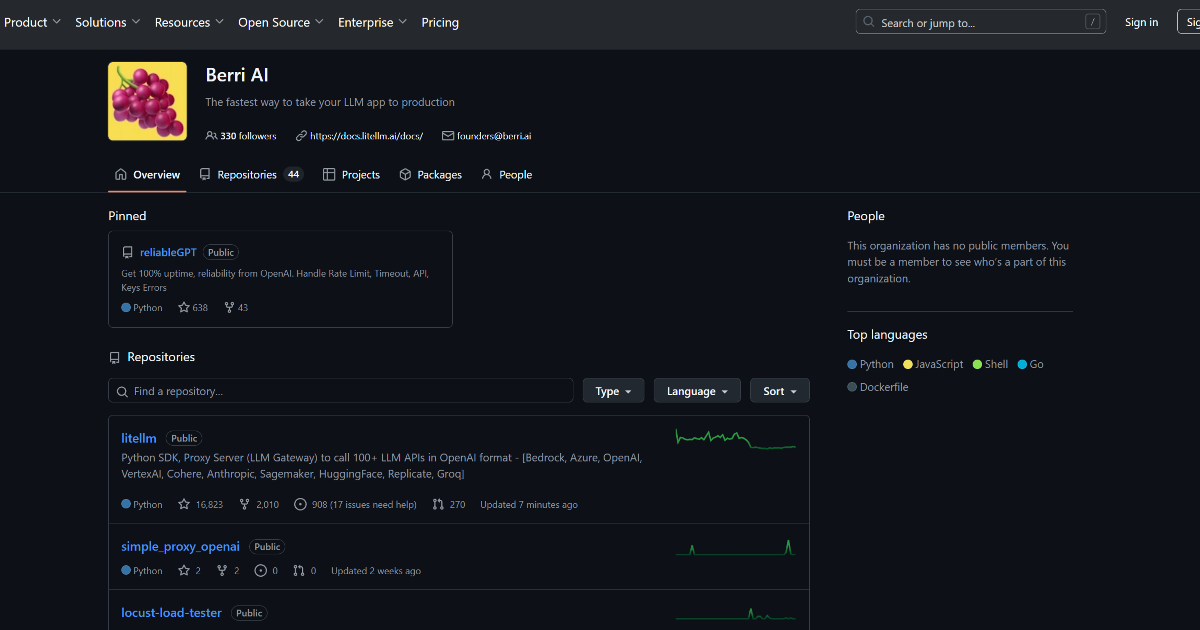

BerriAI-litellm provides a Python SDK and a Proxy Server for calling more than 100 LLM APIs through the OpenAI request and response format. It is aimed at developers and enterprises that call several providers, such as Bedrock, Azure, OpenAI, and VertexAI, and want to avoid maintaining separate translation code for each platform. Key features include broad LLM integration, a consistent output format across providers, retry and fallback logic, and budget and rate limiting. It suits software developers, AI researchers, tech enterprises, and data scientists.
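To make "retry and fallback logic" and "consistent output format" concrete, here is a minimal, library-free sketch of the idea. It is not BerriAI-litellm's implementation or API: the function `complete_with_fallback`, the `Provider` type, and the two stand-in providers are hypothetical names chosen for illustration, and the returned dictionary only imitates the general shape of an OpenAI-style response.

```python
from typing import Callable, Dict, List

# Hypothetical provider type: takes OpenAI-style messages, returns reply text.
Provider = Callable[[List[Dict[str, str]]], str]


def complete_with_fallback(
    providers: Dict[str, Provider],
    messages: List[Dict[str, str]],
    retries_per_provider: int = 2,
) -> Dict:
    """Try each provider in order, retrying before falling back to the next."""
    errors = []
    for name, call in providers.items():
        for attempt in range(1, retries_per_provider + 1):
            try:
                text = call(messages)
            except Exception as exc:
                # Record the failure, then retry or move on to the next provider.
                errors.append(f"{name} attempt {attempt}: {exc}")
                continue
            # Normalize every provider's reply into one OpenAI-like shape.
            return {
                "model": name,
                "choices": [{"message": {"role": "assistant", "content": text}}],
            }
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def flaky_provider(messages: List[Dict[str, str]]) -> str:
    # Stand-in for a provider that is currently unavailable.
    raise TimeoutError("simulated outage")


def echo_provider(messages: List[Dict[str, str]]) -> str:
    # Stand-in for a healthy provider; echoes the last user message.
    return "echo: " + messages[-1]["content"]


result = complete_with_fallback(
    {"primary-model": flaky_provider, "backup-model": echo_provider},
    [{"role": "user", "content": "Hello"}],
)
print(result["model"])
print(result["choices"][0]["message"]["content"])
```

The caller reads `result["choices"][0]["message"]["content"]` the same way regardless of which provider answered, which is the practical benefit of a consistent output format.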

Free tier available with limited features.

License: MIT