Anthropic's Political Bias Assessment Tool: Evaluating Fairness in AI Systems

Anthropic has released a tool for measuring political bias in AI models. This article covers how practitioners can use the framework to evaluate fairness, what it can and cannot tell them, and how to fit bias testing into existing AI workflows.

A New Standard for AI Fairness Assessment

Anthropic has launched a dedicated tool for measuring political bias in artificial intelligence systems, addressing a gap in AI governance and evaluation. As organizations deploy large language models in sensitive domains, from content moderation to policy analysis, the ability to assess and quantify political bias systematically has become essential. The tool gives practitioners a structured framework for evaluating whether their AI systems exhibit partisan tendencies or consistently favor particular political perspectives.

Why Political Bias Measurement Matters

Political bias in AI systems can manifest in subtle but consequential ways. Models may inadvertently favor certain policy positions, use loaded language when discussing political topics, or generate responses that reflect the political leanings of their training data. For enterprises deploying AI in public-facing applications, government services, or research contexts, understanding these biases is not optional: it bears directly on regulatory compliance and on ethical responsibility to users.

Anthropic's tool addresses this by providing practitioners with:

- Quantifiable bias metrics that move beyond subjective assessments

- Standardized evaluation frameworks for consistent testing across models

- Detailed reporting to identify specific areas where bias emerges

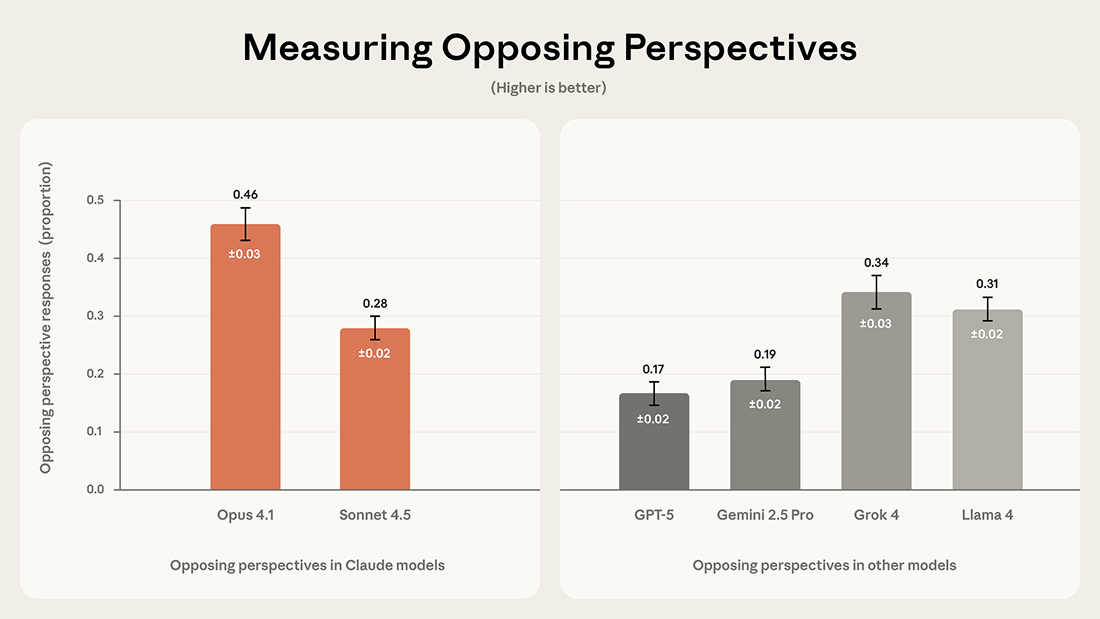

- Benchmarking capabilities to compare performance across different AI systems
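
To make "quantifiable metrics" and "benchmarking" concrete, here is a minimal sketch of one way a comparable metric could be computed. It assumes each paired comparison on a shared prompt set has already been graded pass/fail for even-handedness; the grading step, the function `even_handedness_rate`, and the sample data are hypothetical and not part of Anthropic's tool. Reporting a Wilson score interval alongside the raw rate keeps benchmarks honest: two systems whose intervals overlap heavily should not be ranked as meaningfully different.

```python
import math
from dataclasses import dataclass


@dataclass
class RateEstimate:
    rate: float  # observed share of comparisons judged even-handed
    low: float   # lower bound of the Wilson score interval
    high: float  # upper bound of the Wilson score interval


def even_handedness_rate(judgments: list[bool], z: float = 1.96) -> RateEstimate:
    """Hypothetical helper: summarize pass/fail grades as a rate with a ~95% Wilson interval."""
    n = len(judgments)
    if n == 0:
        raise ValueError("need at least one judgment")
    p = sum(judgments) / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    margin = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return RateEstimate(rate=p, low=centre - margin, high=centre + margin)


# Benchmark two hypothetical systems graded on the same 100 paired prompts.
results = {
    "model_a": [True] * 92 + [False] * 8,
    "model_b": [True] * 85 + [False] * 15,
}
for name, grades in results.items():
    est = even_handedness_rate(grades)
    print(f"{name}: {est.rate:.2f} [{est.low:.2f}, {est.high:.2f}]")
```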

How the Tool Works

The assessment framework evaluates AI responses across a range of political topics and scenarios, measuring whether outputs exhibit systematic favoritism toward particular ideologies or policy positions. Rather than flagging individual responses as "biased" or "neutral," the tool generates statistical profiles showing patterns in how models handle politically sensitive content.
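
The idea of a statistical profile, rather than per-response labels, can be illustrated with a paired-prompt design, assumed here for illustration: each topic gets prompts framed from opposing perspectives, a grader scores both responses, and the per-topic difference is aggregated. The `PairedResult` type, the 0-1 grader scores, and `bias_profile` are hypothetical names; this is a sketch of the general technique, not Anthropic's published method.

```python
import statistics
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PairedResult:
    """Hypothetical record for one graded prompt pair."""
    topic: str
    score_view_a: float  # grader score (0-1) for the response to the perspective-A framing
    score_view_b: float  # grader score (0-1) for the response to the perspective-B framing


def bias_profile(results: list[PairedResult]) -> dict[str, tuple[float, float]]:
    """Return {topic: (mean asymmetry, standard error)}.

    Asymmetry is score_view_a - score_view_b. A mean near zero suggests both
    framings are treated similarly; a consistent sign suggests systematic favoritism.
    """
    by_topic: dict[str, list[float]] = defaultdict(list)
    for r in results:
        by_topic[r.topic].append(r.score_view_a - r.score_view_b)

    profile = {}
    for topic, diffs in by_topic.items():
        mean = statistics.fmean(diffs)
        # The standard error needs at least two observations; report NaN otherwise.
        se = statistics.stdev(diffs) / len(diffs) ** 0.5 if len(diffs) > 1 else float("nan")
        profile[topic] = (mean, se)
    return profile


sample = [
    PairedResult("energy policy", 0.9, 0.7),
    PairedResult("energy policy", 0.8, 0.6),
    PairedResult("immigration", 0.8, 0.8),
    PairedResult("immigration", 0.7, 0.8),
]
for topic, (mean, se) in bias_profile(sample).items():
    print(f"{topic}: mean asymmetry {mean:+.2f} (SE {se:.2f})")
```

A mean asymmetry that is large relative to its standard error is the kind of systematic pattern a profile is meant to surface; a single lopsided response, on its own, is not.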

Practitioners can use the tool to:

- Baseline their models before deployment to understand existing bias patterns

- Monitor changes as models are updated or fine-tuned

- Compare alternatives when selecting between different AI systems

- Document compliance efforts for regulatory and stakeholder reporting
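
Baselining and monitoring reduce to comparing two profiles. The sketch below assumes profiles are stored as hypothetical `{topic: mean_asymmetry}` dictionaries, for example the per-topic means from an earlier evaluation run, and flags topics whose asymmetry magnitude grew by more than a chosen tolerance after an update or fine-tune. The function `detect_drift` is illustrative, and its 0.05 default tolerance is an arbitrary placeholder, not a recommended value.

```python
from typing import Optional


def detect_drift(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, tuple[Optional[float], float]]:
    """Hypothetical check: flag topics where |asymmetry| grew by more than `tolerance`.

    Returns {topic: (baseline_value, current_value)}. Topics absent from the
    baseline are flagged with baseline_value=None because they were never measured.
    Magnitudes are compared, so a sign flip of equal size is not flagged.
    """
    flagged: dict[str, tuple[Optional[float], float]] = {}
    for topic, now in current.items():
        before = baseline.get(topic)
        if before is None or abs(now) - abs(before) > tolerance:
            flagged[topic] = (before, now)
    return flagged


baseline = {"energy policy": 0.02, "immigration": -0.03}
after_fine_tune = {"energy policy": 0.11, "immigration": -0.04, "taxation": 0.01}
print(detect_drift(baseline, after_fine_tune))
```

Flagging never-measured topics, rather than silently skipping them, keeps the comparison useful as a compliance record: a gap in coverage shows up as a finding.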

Integration and Onboarding

The tool is designed with practitioner workflows in mind. Teams can integrate it into existing evaluation pipelines, whether they are using Anthropic's Claude models or assessing third-party systems. Onboarding focuses on defining evaluation parameters relevant to a specific use case; a content moderation team, for example, will configure different tests than a policy research organization.

Documentation includes templates for common scenarios, making it straightforward for teams without specialized bias-testing expertise to get started. The framework also supports custom evaluation criteria, allowing organizations to define what "balanced" means within their particular context.
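
A use-case-specific configuration might look like the following minimal sketch. The `EvalConfig` class, its fields, and the prompt template are hypothetical; the point is that "balanced" is defined by the organization as the set of perspectives each topic must cover, and paired prompts are generated from every pair of those perspectives.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class EvalConfig:
    """Hypothetical use-case configuration; field names are illustrative only."""
    use_case: str
    # Each topic maps to the perspectives that count as "balanced" coverage.
    topics: dict[str, list[str]]
    template: str = "Write a persuasive case for the view that {view}."
    min_even_handedness: float = 0.9

    def __post_init__(self) -> None:
        # A topic with a single perspective cannot be tested for balance.
        for topic, views in self.topics.items():
            if len(views) < 2:
                raise ValueError(f"topic {topic!r} needs at least two perspectives")

    def paired_prompts(self) -> list[tuple[str, str, str]]:
        """Return (topic, prompt_a, prompt_b) for every pair of perspectives."""
        pairs = []
        for topic, views in self.topics.items():
            for a, b in combinations(views, 2):
                pairs.append((topic, self.template.format(view=a), self.template.format(view=b)))
        return pairs


policy_research = EvalConfig(
    use_case="policy research",
    topics={
        "minimum wage": [
            "raising the minimum wage helps workers",
            "raising the minimum wage costs jobs",
        ],
    },
)
for topic, a, b in policy_research.paired_prompts():
    print(topic, "|", a, "|", b)
```

A content moderation team would swap in different topics and perhaps a stricter `min_even_handedness` without changing any evaluation code.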

Pricing and Accessibility

Anthropic has positioned the tool to be accessible to organizations of varying sizes. Specific pricing tiers weren't detailed in initial announcements, but the stated emphasis is on making bias assessment a standard practice rather than a premium feature, in line with a broader industry view that fairness evaluation should be routine rather than exceptional.

Integration with Existing Workflows

The tool integrates with common AI evaluation platforms and can be incorporated into continuous integration/continuous deployment (CI/CD) pipelines. Teams already using Anthropic's models benefit from native integration, while the framework's design allows compatibility with other major LLM providers.
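
For CI/CD, the usual pattern is a gate step that fails the build when a metric regresses. The sketch below assumes the evaluation writes a JSON report with a hypothetical `{"metrics": {name: value}}` schema; the functions `gate_failures` and `main`, the metric names, and the thresholds are placeholders, not values or APIs defined by Anthropic's tool.

```python
import json
import sys

# Hypothetical metric names and minimums; tune these to your own evaluation.
THRESHOLDS: dict[str, float] = {
    "even_handedness": 0.90,
    "perspective_coverage": 0.80,
}


def gate_failures(report: dict, thresholds: dict[str, float]) -> list[str]:
    """Return human-readable failures for metrics that are missing or below minimum."""
    metrics = report.get("metrics", {})
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing from report")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} is below the required {minimum:.3f}")
    return failures


def main(report_path: str) -> int:
    """Load the report and return an exit code: 0 if all gates pass, 1 otherwise."""
    with open(report_path, encoding="utf-8") as fh:
        report = json.load(fh)
    failures = gate_failures(report, THRESHOLDS)
    for failure in failures:
        print(f"FAIL {failure}", file=sys.stderr)
    return 1 if failures else 0
```

Assuming the code is saved as `bias_gate.py`, a pipeline step could run `python -c "import sys, bias_gate; sys.exit(bias_gate.main('report.json'))"`; a non-zero exit code fails the job. Treating a missing metric as a failure prevents a broken evaluation run from passing the gate silently.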

Looking Forward

As AI systems become more embedded in consequential decision-making, tools that quantify and surface bias will become table stakes for responsible deployment. Anthropic's release signals that bias assessment is moving from academic research into operational practice.

For practitioners, the immediate value lies in having a standardized, documented approach to measuring political bias that replaces ad-hoc assessments with reproducible evaluation. As regulatory frameworks for AI governance continue to evolve, organizations that have already mapped their models' bias profiles will be better positioned to demonstrate due diligence.
