Cisco's Silicon One P200: Revolutionizing AI Data Center Connectivity

Cisco's Silicon One P200 chip brings 51.2 Tbps of routing capacity, deep buffers, and flexible deployment options to AI data center interconnects, challenging Broadcom and Nvidia.

Cisco Systems has unveiled the Silicon One P200, a networking chip designed to connect AI data centers across long distances. The launch positions Cisco against Broadcom and Nvidia in the race to supply the networks that link next-generation AI infrastructure.

Unmatched Routing Capacity

The Silicon One P200 delivers 51.2 terabits per second (Tbps) of routing capacity in a single device. Cisco positions this capacity as a way for enterprises and cloud providers to link distributed AI workloads with fewer devices and lower power draw.
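To put 51.2 Tbps in perspective, the aggregate capacity can be divided into ports of a given speed. The sketch below is simple arithmetic; the port speeds it loops over are illustrative assumptions, not a published list of P200 port configurations, and the `port_count` helper is hypothetical.

```python
# Split a switch/router ASIC's aggregate capacity into equal-speed ports.
# The port speeds below are illustrative only; real configurations depend
# on the platform built around the chip.

CHIP_CAPACITY_GBPS = 51_200  # 51.2 Tbps, as stated for the P200


def port_count(capacity_gbps: int, port_speed_gbps: int) -> int:
    """Return how many ports of a given speed fit in the capacity."""
    if port_speed_gbps <= 0:
        raise ValueError("port speed must be positive")
    return capacity_gbps // port_speed_gbps


if __name__ == "__main__":
    for speed in (1600, 800, 400, 100):
        print(f"{speed}G ports: {port_count(CHIP_CAPACITY_GBPS, speed)}")
```

For example, 51.2 Tbps corresponds to 64 ports at 800 Gbps or 128 ports at 400 Gbps.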

Addressing AI Networking Challenges

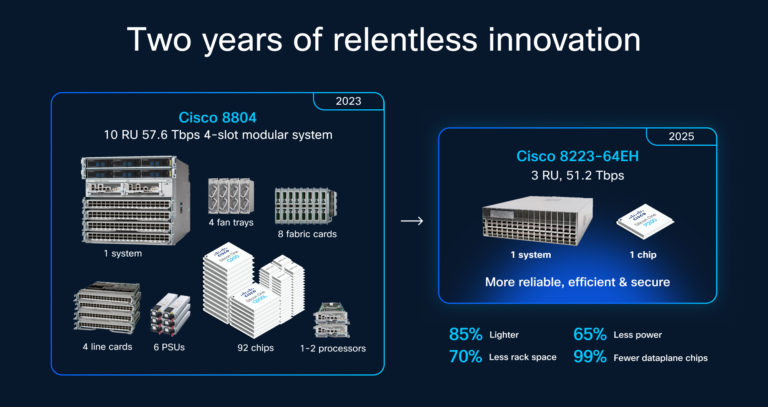

As AI models grow to require tens of thousands of GPUs, housing an entire cluster in a single data center becomes impractical. This has created demand for scale-across architectures: networks that span multiple data centers. Cisco's solution, available in platforms such as the Cisco 8223, is designed to interconnect these sites without compromising speed, security, or reliability.

Key Features of the Silicon One P200

- 51.2 Tbps Routing Capacity: Cisco describes it as the industry's first standalone 51.2 Tbps routing chip; a single device can replace multiple systems and reduce power consumption.

- Deep Buffers and Advanced Congestion Control: Pairs deep packet buffers with real-time congestion management, which helps absorb the bursty, synchronized traffic that AI workloads generate, especially over long links.

- Deployment Flexibility: Can be deployed in fixed, modular, or disaggregated chassis designs.

- Power Efficiency: Consolidates functions into a single chip, reducing energy consumption.

- Security and Programmability: Built-in security features and real-time programmability help keep networks resilient and adaptable.
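Deep buffers matter more once links span data centers, because more data is in flight on a long link. A common rule of thumb sizes a buffer at roughly the bandwidth-delay product (rate × round-trip time). The sketch below applies that generic rule; it is not Cisco's buffer-sizing method, and the ~5 µs/km fiber delay, the 800 Gbps port speed, and the distances are assumptions chosen for illustration.

```python
# Bandwidth-delay product (BDP): a classic rule of thumb that a buffer
# absorbing bursts on a link needs roughly rate x RTT bytes.
# The ~5 microseconds per km fiber delay is an approximation
# (light travels at roughly two-thirds of its vacuum speed in glass).

FIBER_DELAY_S_PER_KM = 5e-6  # assumed one-way propagation delay per km


def round_trip_time_s(distance_km: float) -> float:
    """Approximate round-trip propagation delay over fiber, ignoring queuing."""
    return 2 * distance_km * FIBER_DELAY_S_PER_KM


def bdp_bytes(rate_gbps: float, distance_km: float) -> float:
    """Bytes in flight on a link of the given rate and length."""
    rate_bps = rate_gbps * 1e9
    return rate_bps * round_trip_time_s(distance_km) / 8


if __name__ == "__main__":
    # Hypothetical distances: same campus, metro, and long-haul.
    for km in (1, 100, 1000):
        mb = bdp_bytes(800, km) / 1e6
        print(f"{km:>5} km: ~{mb:,.1f} MB of buffer per 800G port")
```

Under these assumptions, a single 800 Gbps port over 1,000 km carries about 1 GB in flight, roughly a thousand times more than the same port within one building. The rate × RTT rule is conservative; with many concurrent flows, smaller buffers can suffice.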

Industry Impact

Cisco's push into AI networking intensifies competition with Broadcom and Nvidia. The Cisco 8223 router, powered by the P200, supports over 500 Tbps of capacity, providing the connectivity needed to share AI workloads across sites.

Context and Implications

The launch of the Silicon One P200 is timely, as enterprises and cloud providers invest heavily in AI infrastructure. The chip's performance, efficiency, and flexibility target the constraints these buyers face as they expand AI capacity across multiple sites.

Benefits for Operators

- Reduced Total Cost of Ownership: Hardware consolidation and lower power consumption lead to significant savings.

- Simplified Network Operations: A unified architecture reduces complexity.

- Future-Proof Scalability: Designed to let networks grow to meet future AI demands without disruptive upgrades.
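The power side of the total-cost argument is straightforward arithmetic: a lower constant power draw saves energy every hour of the year. The sketch below uses hypothetical wattages and a hypothetical electricity price, not published figures for any Cisco product, and it ignores cooling overhead, which would increase the savings.

```python
# Rough annual energy-cost comparison for a hardware-consolidation scenario.
# All wattages and the electricity price are hypothetical inputs.
# Cooling overhead (e.g. data center PUE) is not included.

HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(power_watts: float, price_per_kwh: float) -> float:
    """Cost of running a constant electrical load for one year."""
    kwh = power_watts / 1000 * HOURS_PER_YEAR
    return kwh * price_per_kwh


def annual_savings(old_watts: float, new_watts: float, price_per_kwh: float) -> float:
    """Yearly energy-cost difference between two constant loads."""
    return (annual_energy_cost(old_watts, price_per_kwh)
            - annual_energy_cost(new_watts, price_per_kwh))


if __name__ == "__main__":
    # Hypothetical: several systems drawing 6 kW in total replaced by one at 2 kW.
    saved = annual_savings(6000, 2000, 0.10)
    print(f"~${saved:,.0f} per year at $0.10/kWh")
```

In this made-up scenario, cutting 4 kW of continuous draw saves about 35,000 kWh, or roughly $3,500 per year, per consolidated site before cooling is counted.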

As AI workloads become more distributed, Cisco's commitment to standard Ethernet could help the P200 gain adoption faster than proprietary interconnects.

Conclusion

Cisco's Silicon One P200 marks a significant advance in AI networking. By combining 51.2 Tbps of routing capacity, deep buffers, and deployment flexibility in a single chip, Cisco aims to help organizations build the next generation of distributed AI infrastructure. As the industry shifts toward scale-across architectures, chips like the P200 are likely to play a central role in overcoming the limitations of current networks.