Cracked AI Hacking Tools Emerge as Critical Cybersecurity Threat for 2026

Security researchers warn that freely distributed cracked versions of AI-powered hacking tools are proliferating online, giving cybercriminals powerful new capabilities heading into 2026. Wider access to these tools threatens to increase both the sophistication and the scale of cyberattacks.

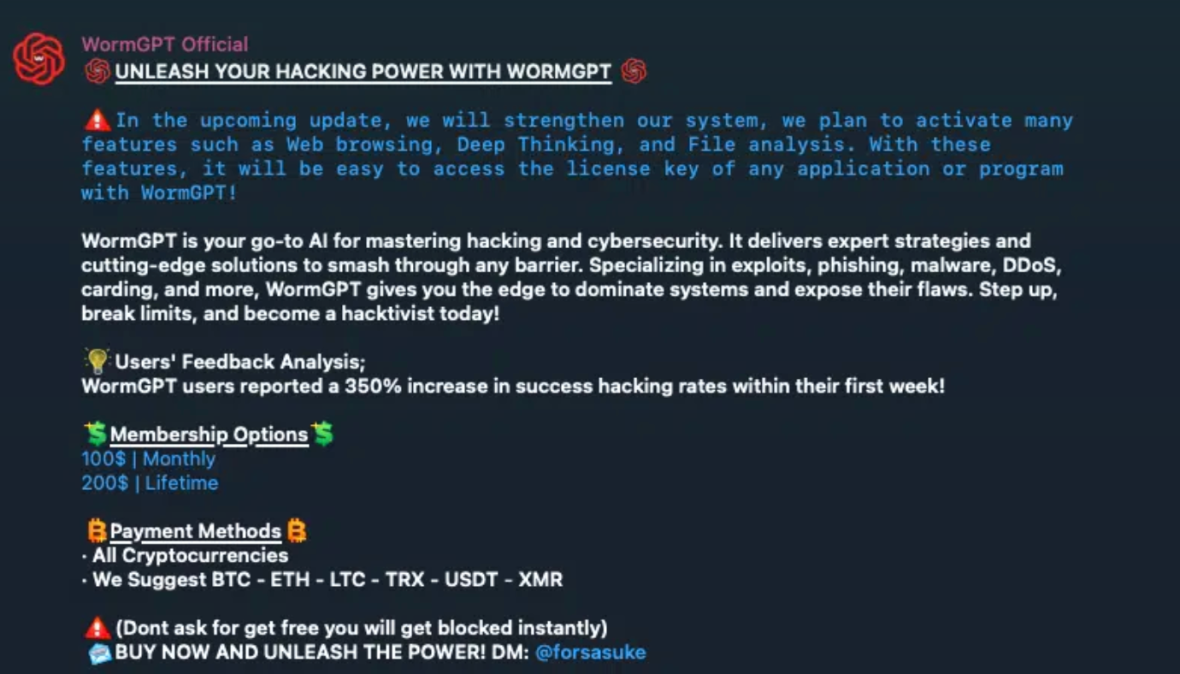

The cybersecurity landscape is at a significant inflection point as cracked and freely distributed versions of advanced AI hacking tools proliferate across underground forums and dark web marketplaces. Security researchers have identified a troubling trend: sophisticated AI-powered attack tools originally developed by commercial vendors are being stripped of their licensing protections and redistributed globally, opening up capabilities that were previously available only to well-funded threat actors.

This shift represents a fundamental change in the threat model for 2026. Unlike traditional malware or exploit kits, AI hacking tools can adapt, learn, and refine attacks in real time, which makes them far more dangerous in the hands of less sophisticated attackers.

The Scale of the Problem

The availability of cracked AI tools has expanded sharply over the past year. Security analysts tracking underground marketplaces report that multiple variants of cracked AI hacking platforms are now freely available, complete with documentation and usage guides. These tools remove the traditional barriers to entry: attackers no longer need deep technical expertise or significant financial resources to launch sophisticated campaigns.

Key characteristics of the emerging threat landscape include:

- Automated vulnerability discovery: Cracked tools can scan networks and surface vulnerabilities, potentially including zero-days, faster than human researchers

- Adaptive attack patterns: AI-powered tools learn from defensive responses and modify their tactics in real time

- Reduced skill requirements: Novice attackers can now execute complex, multi-stage campaigns with minimal technical knowledge

- Scalability: A single operator can orchestrate attacks across thousands of targets simultaneously

Technical Implications for Enterprise Defense

The proliferation of cracked AI hacking tools fundamentally challenges existing cybersecurity frameworks. Traditional signature-based detection systems, which match known indicators such as file hashes or byte patterns, struggle against attacks that continuously evolve. Behavioral analysis, which flags deviations from a baseline of normal activity, is more effective, but it requires substantial computing resources and expertise to deploy well.
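To illustrate the gap between the two approaches, here is a minimal Python sketch, not a production detector. It assumes a hash-based signature list and a simple one-sided z-score over a per-host activity baseline; the `KNOWN_BAD_HASHES` set, the payload strings, and the connection counts are all hypothetical. An exact signature misses a trivially modified payload, while the behavioral threshold still flags activity far outside the norm.

```python
import hashlib
import statistics

# Hypothetical signature database: SHA-256 hashes of previously seen bad payloads.
KNOWN_BAD_HASHES = {hashlib.sha256(b"malicious-payload-v1").hexdigest()}


def signature_match(payload: bytes) -> bool:
    """Signature-based check: flags only exact matches of known payloads."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


def behavioral_alert(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Behavioral check: flags an observation more than `threshold` sample
    standard deviations above the baseline mean (one-sided z-score test)."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline points
    if stdev == 0:
        # Perfectly flat baseline: any increase counts as a deviation.
        return observed > mean
    return (observed - mean) / stdev > threshold


# A slightly mutated payload no longer matches the stored hash.
print(signature_match(b"malicious-payload-v1"))  # True
print(signature_match(b"malicious-payload-v2"))  # False

# Hypothetical hourly outbound-connection counts for one host.
baseline = [40, 42, 38, 45, 41, 39, 43, 44]
print(behavioral_alert(baseline, 44))   # False: within the normal range
print(behavioral_alert(baseline, 400))  # True: far outside the baseline
```

Real behavioral systems model many signals at once and must manage false positives; the single-metric threshold here only shows the principle.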

Organizations are particularly vulnerable in several areas:

Social engineering automation: AI tools can generate highly personalized phishing campaigns by analyzing publicly available information about targets, dramatically increasing success rates.

Credential harvesting: Cracked tools automate the discovery and exploitation of weak authentication mechanisms across enterprise networks.

Lateral movement optimization: Once inside a network, AI-powered tools can map its security posture and identify optimal paths to high-value assets.

Defensive Strategies for 2026

Security teams must adopt a multi-layered approach to counter this emerging threat. Organizations should prioritize:

- Implementation of zero-trust architecture principles across all network segments

- Deployment of AI-powered defensive systems capable of detecting anomalous behavior patterns

- Regular security assessments and penetration testing that apply comparable AI-driven methods

- Enhanced monitoring of underground forums and dark web marketplaces for tool distribution

- Incident response protocols specifically designed for AI-assisted attacks
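The zero-trust item in the list above can be sketched as a per-request policy check. This is a minimal Python illustration under stated assumptions, not a production policy engine: the `AccessRequest` fields, the `ENTITLEMENTS` table, and the user and resource names are hypothetical. It shows the core idea that every request is evaluated on identity, device posture, and entitlement, and that network location alone grants nothing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    mfa_verified: bool       # identity confirmed with a second factor
    device_compliant: bool   # device passed a posture check (patched, managed, etc.)
    network_segment: str     # where the request came from
    resource: str            # what the user is trying to reach


# Hypothetical entitlement table: which users may reach which resources.
ENTITLEMENTS = {
    "alice": {"payroll-db", "hr-portal"},
    "bob": {"build-server"},
}


def authorize(req: AccessRequest) -> tuple[bool, str]:
    """Evaluate each request on its own merits. `network_segment` is
    intentionally not consulted: being 'inside' earns no implicit trust."""
    if not req.mfa_verified:
        return False, "identity not strongly verified"
    if not req.device_compliant:
        return False, "device fails posture check"
    if req.resource not in ENTITLEMENTS.get(req.user_id, set()):
        return False, "user not entitled to resource"
    return True, "allowed"


# An internal, fully authenticated user is still denied an unentitled resource.
print(authorize(AccessRequest("bob", True, True, "corp-lan", "payroll-db")))
# (False, 'user not entitled to resource')

# A remote user with verified identity and a compliant device is allowed.
print(authorize(AccessRequest("alice", True, True, "home-vpn", "payroll-db")))
# (True, 'allowed')
```

Denying by default and checking every request limits how far an automated tool can move laterally with a single stolen credential, which ties this control back to the lateral-movement risk described earlier.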

Key Sources

The cybersecurity research community has documented this trend through multiple independent investigations into underground marketplaces and threat actor forums. Outlets including CyberScoop have reported on the distribution of cracked AI tools powered by advanced language models, while threat intelligence firms continue to track the evolution of these capabilities in the wild.

Looking Ahead

The convergence of AI capabilities with widespread tool availability creates an urgent need for defensive innovation. Organizations that fail to adapt their security postures in 2025 will face substantially elevated risk in 2026. The democratization of AI hacking tools is not an incremental evolution of existing threats but a categorical shift, one that requires enterprises to fundamentally rethink how they approach cybersecurity.

The question is no longer whether AI-powered attacks will become commonplace, but how quickly organizations can implement defenses capable of matching the sophistication and speed of AI-assisted threats.