Critical Security Vulnerabilities Discovered in Anthropic's Claude Cowork Agent

Anthropic's new Claude Cowork AI agent has serious security flaws that could expose sensitive files and compromise enterprise systems. Security researchers have identified vulnerabilities in its file-handling capabilities that put early adopters at risk.

The Vulnerability That Threatens Enterprise AI Adoption

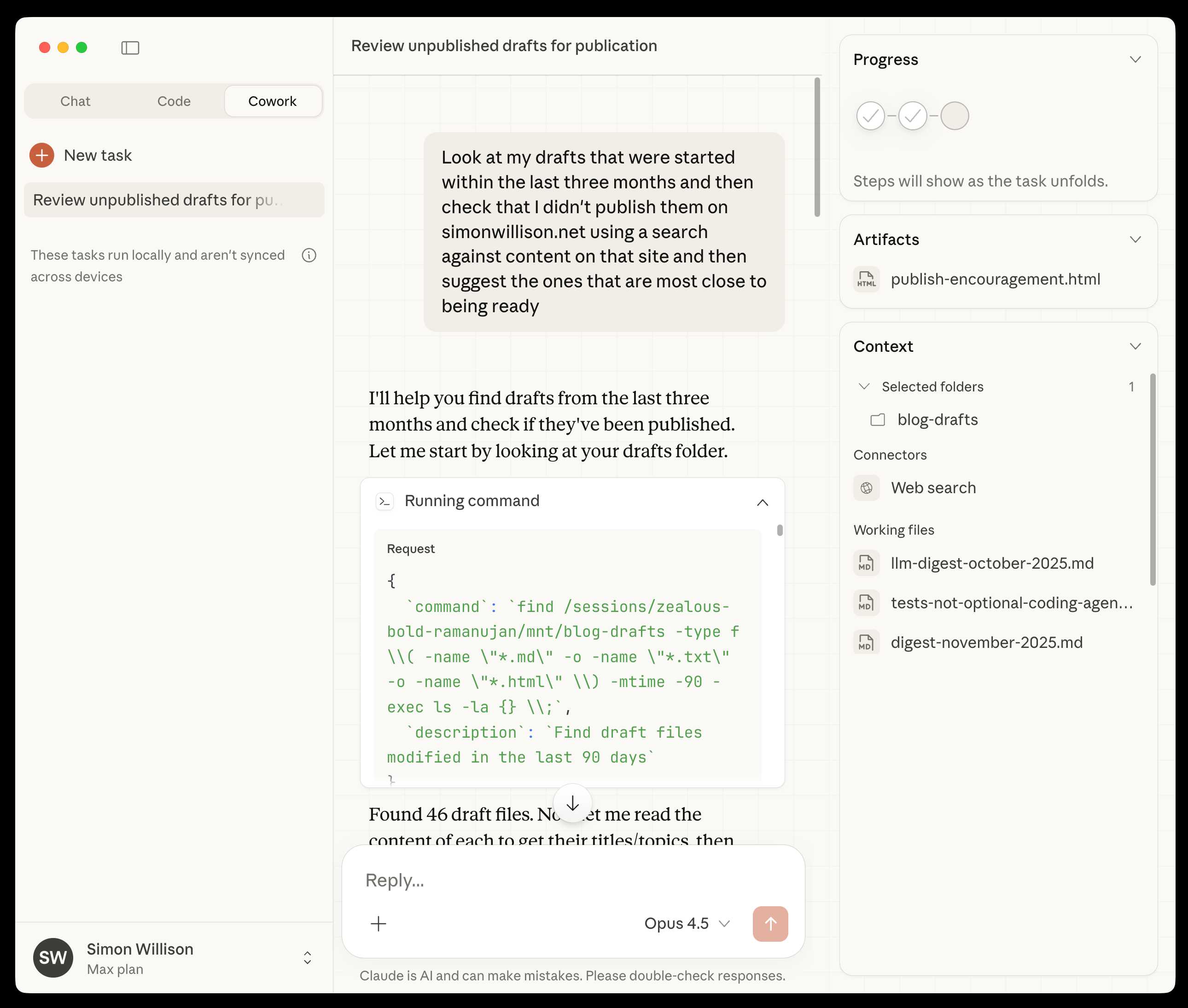

Anthropic's ambitious push into autonomous AI agents just hit a significant roadblock. Security researchers have uncovered critical vulnerabilities in Claude Cowork, the company's new general-purpose agent designed to handle file management and complex workflows. The flaws expose a fundamental tension in the race to deploy capable AI systems: the more autonomous these agents become, the greater the security risks they introduce.

Claude Cowork represents Anthropic's answer to competitors racing to build AI agents that can operate independently across enterprise systems. But early technical analysis reveals serious gaps in how the agent handles file access and permissions, raising questions about whether the system is ready for production deployment in sensitive environments.

What the Vulnerabilities Expose

The security issues center on Claude Cowork's file-management capabilities—the very feature that makes it attractive to enterprises. According to security researchers, the agent can inadvertently expose sensitive files and bypass intended access restrictions. This is particularly concerning for startups and organizations handling proprietary data, customer information, or regulated content.

Key vulnerability areas include:

- Insufficient permission validation: The agent may not properly verify file access rights before executing operations

- Path traversal risks: Potential for the agent to access files outside intended directories

- Credential exposure: Sensitive authentication tokens or API keys could be inadvertently logged or transmitted

- Local RAG vulnerabilities: Weaknesses in how the agent handles local retrieval-augmented generation (RAG) pipelines could leak indexed documents or training data
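
To make two of these categories concrete, here is a minimal Python sketch of the kind of checks a file-handling agent could apply. It does not reflect Claude Cowork's actual code, which the researchers' reports do not describe. The names `resolve_within`, `PathOutsideRootError`, and `redact_secrets` are hypothetical, and the secret pattern is a deliberately simple assumption rather than a complete detector.

```python
import re
from pathlib import Path


class PathOutsideRootError(PermissionError):
    """Raised when a requested path resolves outside the allowed root."""


def resolve_within(root: str, requested: str) -> Path:
    """Resolve `requested` against `root` and reject anything that escapes it.

    Hypothetical helper: resolving first collapses `..` segments and follows
    symlinks, so the containment check runs on the real target path.
    """
    root_path = Path(root).resolve()
    candidate = (root_path / requested).resolve()
    # Path.is_relative_to requires Python 3.9 or newer.
    if not candidate.is_relative_to(root_path):
        raise PathOutsideRootError(f"{requested!r} escapes {root_path}")
    return candidate


# Assumed, intentionally narrow pattern: key names followed by '=' or ':'.
_SECRET_PATTERN = re.compile(
    r"\b(api[_-]?key|token|secret|password)\b(\s*[=:]\s*)(\S+)",
    re.IGNORECASE,
)


def redact_secrets(text: str) -> str:
    """Mask values that look like credentials before text is logged or sent."""
    return _SECRET_PATTERN.sub(
        lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]", text
    )
```

With these helpers, `resolve_within("/srv/workspace", "../../etc/passwd")` raises `PathOutsideRootError`, and `redact_secrets("api_key=abc123 user=bob")` returns `"api_key=[REDACTED] user=bob"`. A containment check like this addresses path traversal only. Verifying that the requesting user is actually permitted to read a file inside the root is a separate step that this sketch leaves out.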

The Broader Pattern

This isn't an isolated incident. Security Boulevard reports that vulnerabilities in Anthropic's Claude codebase have surfaced before, suggesting systemic issues in how the company approaches secure development practices for autonomous systems. The discovery raises uncomfortable questions: Are AI companies prioritizing capability over security? How thoroughly are these systems tested before release?

The timing is particularly awkward for Anthropic, which has positioned itself as the safety-conscious alternative in the AI arms race. The company has published research on healthcare and life sciences applications, sectors where security failures carry serious consequences.

What This Means for Enterprise Adoption

Organizations evaluating Claude Cowork for production use should exercise caution. The vulnerabilities suggest that autonomous agents operating across file systems require more rigorous security frameworks than current implementations provide. Until Anthropic releases comprehensive patches and security audits, enterprises handling sensitive data should treat Claude Cowork as experimental technology.

The incident also highlights a critical gap in the AI industry: the lack of standardized security testing frameworks for autonomous agents. Traditional software security practices may not adequately address the unique risks posed by systems that can make independent decisions about file access and data handling.

Anthropic has an opportunity to demonstrate leadership by treating these vulnerabilities as a wake-up call rather than a minor setback. The company's response—speed of patches, transparency about root causes, and commitment to security-first development—will determine whether Claude Cowork becomes a trusted enterprise tool or a cautionary tale about moving too fast in AI deployment.