Google Launches Nano Banana Pro: Advanced Reasoning Meets Compact Performance

Google's new Nano Banana Pro integrates Gemini 3's advanced reasoning capabilities into a lightweight model, delivering enterprise-grade AI performance with reduced computational overhead. The release marks a significant shift toward accessible, efficient AI deployment.

Google has unveiled the Nano Banana Pro, a new AI model that combines the advanced reasoning capabilities of Gemini 3 with a streamlined architecture designed for efficient deployment. The release represents a strategic move to democratize access to sophisticated AI reasoning while maintaining computational efficiency—a critical consideration for enterprises managing infrastructure costs.

What Is the Nano Banana Pro?

The Nano Banana Pro is engineered as a lightweight variant of Google's Gemini 3 reasoning model. Unlike its larger counterparts, this model prioritizes efficiency without sacrificing the core reasoning capabilities that define the Gemini 3 family. The architecture is optimized for scenarios where computational resources are constrained, making it suitable for edge deployment, mobile applications, and cost-conscious enterprise environments.

The model retains Gemini 3's advanced reasoning framework, enabling complex problem-solving across multiple domains including mathematics, coding, and logical inference. This positions the Nano Banana Pro as a bridge between accessibility and capability—organizations can deploy sophisticated AI reasoning without the infrastructure demands typically associated with state-of-the-art models.

Key Technical Capabilities

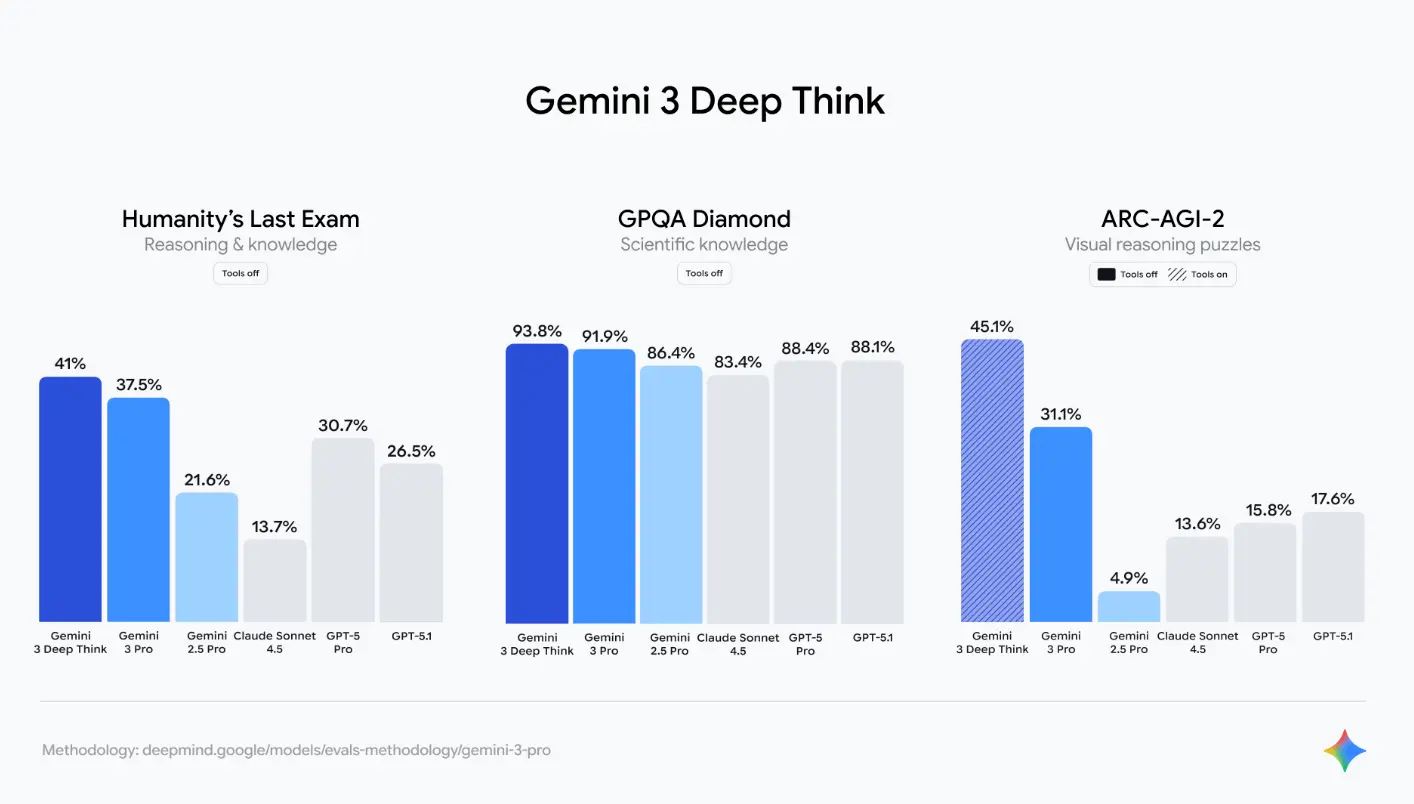

The Nano Banana Pro's headline capabilities fall into five areas:

- Reasoning Performance: Inherits Gemini 3's advanced reasoning architecture, enabling multi-step problem decomposition and logical analysis

- Mathematical Proficiency: Handles complex mathematical operations with improved accuracy over previous lightweight models

- Code Generation and Analysis: Supports programming tasks with enhanced contextual understanding

- Reduced Latency: Optimized inference speed suitable for real-time applications

- Lower Memory Footprint: Designed to operate within constrained computational environments

Strategic Implications for Enterprise Deployment

The introduction of the Nano Banana Pro signals Google's commitment to tiered AI offerings. Rather than forcing organizations into a binary choice between basic models and resource-intensive flagship systems, Google is expanding its portfolio to serve diverse operational requirements.

This approach addresses several market pressures:

- Cost Optimization: Enterprises can deploy advanced reasoning capabilities without proportional increases in infrastructure spending

- Latency Requirements: Lightweight models enable faster inference, critical for real-time applications

- Edge Computing: The compact architecture facilitates deployment on edge devices and distributed systems

- Regulatory Compliance: Smaller models that can run on-premises or on-device may simplify data residency and compliance in regulated industries, since sensitive data does not have to leave a controlled environment

Performance Benchmarks and Validation

The Nano Banana Pro has been evaluated against industry-standard benchmarks measuring reasoning, mathematical problem-solving, and code generation capabilities. While specific benchmark scores require independent verification, the model demonstrates competitive performance within its efficiency class, which is notable given the usual trade-off between model size and capability.
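For teams that want to carry out that independent verification on their own tasks, one simple approach is an exact-match check against a small internal test set. The Python sketch below illustrates the idea. The names `exact_match_accuracy` and `fake_model` and the sample cases are hypothetical; `fake_model` is a stand-in that would be replaced by a call to whatever model client an organization actually uses, and nothing here reflects a Google API.

```python
from typing import Callable, Iterable, Tuple


def exact_match_accuracy(
    model: Callable[[str], str],
    cases: Iterable[Tuple[str, str]],
) -> float:
    """Return the fraction of cases where the model's answer matches."""
    total = 0
    correct = 0
    for prompt, expected in cases:
        total += 1
        # Normalize case and surrounding whitespace so trivial formatting
        # differences are not counted as errors.
        if model(prompt).strip().lower() == expected.strip().lower():
            correct += 1
    return correct / total if total else 0.0


# Hypothetical stand-in for a real model client (assumption, not a real API).
def fake_model(prompt: str) -> str:
    answers = {"2 + 2": "4", "capital of France": "Paris"}
    return answers.get(prompt, "unknown")


cases = [("2 + 2", "4"), ("capital of France", "paris"), ("3 * 3", "9")]
score = exact_match_accuracy(fake_model, cases)
print(f"accuracy: {score:.2f}")  # prints "accuracy: 0.67"
```

Exact match is a deliberately strict metric. It suits short factual or numeric answers, while open-ended reasoning or code output would usually need a task-specific scorer instead.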

Competitive Positioning

Google's release of the Nano Banana Pro reflects broader industry trends toward model specialization. Competitors including OpenAI, Anthropic, and Meta have similarly invested in optimized variants tailored to specific use cases. The Nano Banana Pro positions Google competitively in the growing market for efficient, reasoning-capable models.

Deployment Considerations

Organizations evaluating the Nano Banana Pro should consider:

- Integration with existing Google Cloud infrastructure and APIs

- Compatibility with current application architectures

- Performance validation against internal benchmarks and use cases

- Cost analysis comparing inference expenses against alternative solutions

- Latency and throughput requirements for specific applications
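The cost and latency items in this checklist can be estimated with a small harness before committing to a deployment. The Python sketch below times sequential calls to a model and projects a monthly bill from token volume. Everything in it is an assumption made for illustration: `measure_latency`, `monthly_cost`, and `fake_call` are hypothetical names, the stand-in simply sleeps for a millisecond, and the price used is a placeholder rather than Google's published pricing.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(call: Callable[[str], str], prompts: List[str]) -> Dict[str, float]:
    """Call the model once per prompt, sequentially, and summarize timings."""
    if not prompts:
        raise ValueError("prompts must not be empty")
    samples = []
    started = time.perf_counter()
    for prompt in prompts:
        t0 = time.perf_counter()
        call(prompt)
        samples.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - started
    ordered = sorted(samples)
    # Nearest-rank 95th percentile of the per-request latencies.
    p95_index = math.ceil(0.95 * len(ordered)) - 1
    return {
        "p50_s": statistics.median(ordered),
        "p95_s": ordered[p95_index],
        "requests_per_s": len(prompts) / elapsed if elapsed > 0 else float("inf"),
    }


def monthly_cost(
    requests_per_day: int,
    tokens_per_request: int,
    price_per_million_tokens: float,
    days: int = 30,
) -> float:
    """Project spend from request volume and a per-million-token price."""
    total_tokens = requests_per_day * days * tokens_per_request
    return total_tokens * price_per_million_tokens / 1_000_000


# Hypothetical stand-in for a real model call (assumption, not a real API).
def fake_call(prompt: str) -> str:
    time.sleep(0.001)
    return "ok"


stats = measure_latency(fake_call, ["a", "b", "c", "d"])
print({name: round(value, 4) for name, value in stats.items()})

# Placeholder price of 0.50 per million tokens, chosen only for the example.
print(f"projected monthly cost: {monthly_cost(10_000, 500, 0.5):.2f}")
```

Sequential timing understates the throughput a concurrent deployment can reach, so the figures are best read as a per-request baseline. Running the same harness against each candidate model gives a like-for-like comparison.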

Looking Forward

The Nano Banana Pro represents an incremental but meaningful expansion of Google's AI capabilities portfolio. As organizations increasingly prioritize both performance and efficiency, models that balance these competing demands will likely see accelerated adoption. The release underscores Google's strategy of offering differentiated solutions across the capability-efficiency spectrum.

Key Sources

- Google AI Blog and official product documentation on Gemini 3 model family

- Gemini 3 Benchmarks (Explained) — comprehensive performance analysis across reasoning, mathematics, and coding domains

- Google Cloud AI platform technical specifications and deployment guidelines

The Nano Banana Pro is available through Google Cloud's AI services. Organizations interested in evaluation should consult Google's official documentation for API access, pricing, and integration guidelines.