Grok AI Faces Major Service Outage, Leaving Thousands Unable to Access Chatbot

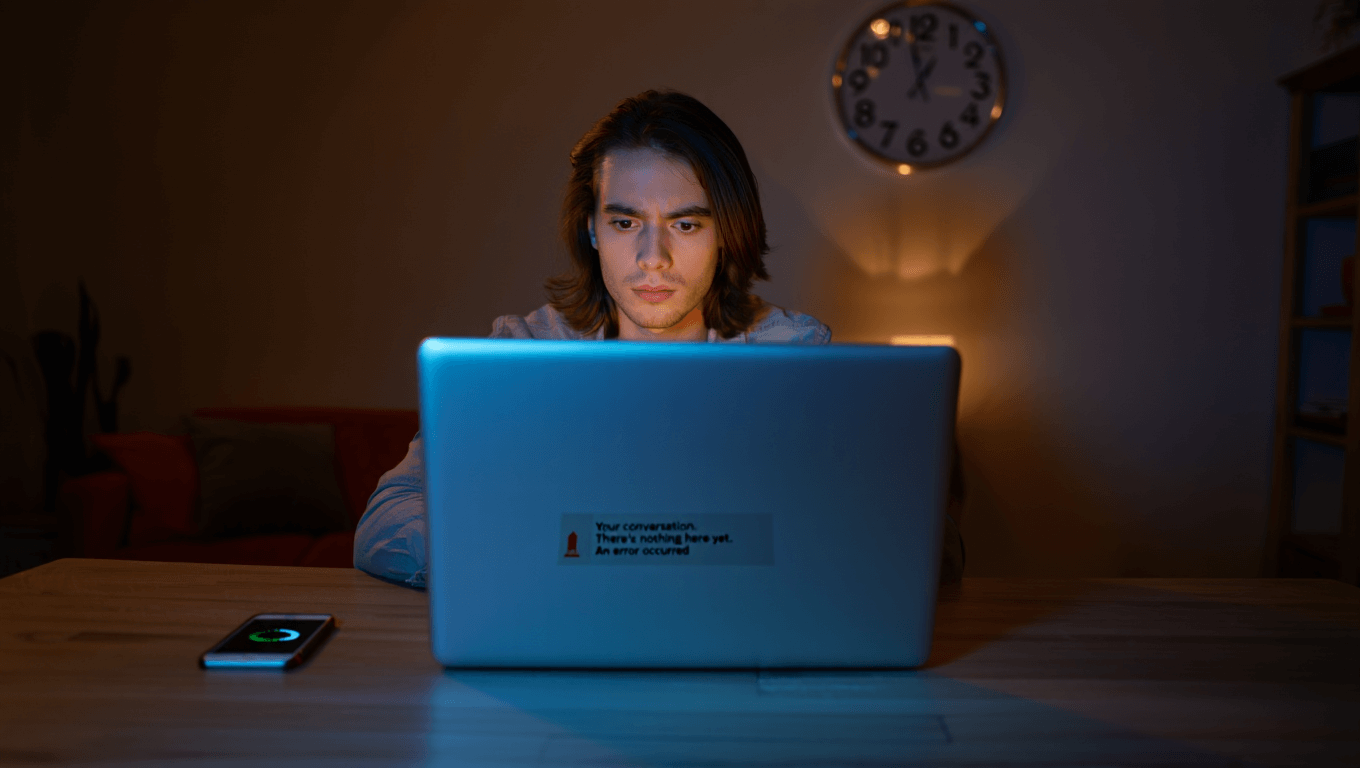

Grok AI experienced a significant blackout affecting thousands of users worldwide, with both the web platform and mobile application becoming unresponsive. The outage raised questions about service reliability and infrastructure resilience for the emerging AI chatbot platform.

Grok AI Experiences Widespread Service Disruption

Grok AI, the AI chatbot service operated by xAI, suffered a major outage that rendered its platform inaccessible to thousands of users across multiple regions. Both the web-based interface and mobile applications became unresponsive during the incident, preventing users from accessing the service's core functionality.

The outage impacted users attempting to interact with Grok's conversational AI features, with reports indicating that requests were met with error messages and failed connection attempts. The scope of the disruption extended across different access points, suggesting a systemic infrastructure issue rather than isolated regional problems.

Timeline and Impact

The blackout affected a significant portion of Grok's active user base during peak usage hours. Users reported being unable to load the platform, submit queries, or receive responses from the chatbot service. The widespread nature of the outage prompted immediate attention from the platform's operations team.

Service disruptions of this magnitude typically stem from several potential causes:

- Infrastructure failures affecting core servers or data centers

- Database connectivity issues preventing query processing

- Load balancing problems during traffic spikes

- Deployment-related incidents from recent updates or maintenance

- Third-party service dependencies experiencing failures

Technical Analysis

Outages affecting AI chatbot platforms present unique challenges due to the computational resources required to maintain real-time inference capabilities. Unlike traditional web services, AI platforms must manage:

- Continuous model inference across distributed GPU clusters

- Real-time token generation and streaming responses

- High-concurrency request handling with strict latency requirements

- Persistent session management for ongoing conversations

The scale of infrastructure required to support thousands of concurrent users places significant demands on system reliability and redundancy protocols. Any breakdown in these systems can result in cascading failures that affect the entire user base.

Service Reliability Considerations

For emerging AI platforms competing in a crowded market, service reliability represents a critical differentiator. Users expect consistent uptime comparable to established cloud services, with transparent communication during incidents. Extended outages can erode user confidence and drive migration to competing platforms.

The incident highlights the operational complexity of maintaining large-scale AI services. Unlike traditional SaaS applications, AI chatbots require specialized infrastructure, sophisticated monitoring systems, and rapid incident response capabilities to minimize downtime.

Recovery and Response

Platform operators typically implement incident response protocols that include:

- Immediate escalation to infrastructure and engineering teams

- Real-time monitoring of system metrics and error rates

- Communication updates to affected users

- Root cause analysis following service restoration

- Post-incident reviews to prevent recurrence

The speed of recovery and quality of user communication during outages significantly impact user retention and platform reputation.

Key Sources

- Grok AI Service Status and User Reports

- xAI Platform Infrastructure Documentation

- Industry Analysis of AI Chatbot Service Reliability

Looking Forward

Service outages, while disruptive, provide valuable data for platform operators to strengthen infrastructure resilience. The incident underscores the importance of robust redundancy, comprehensive monitoring, and proactive capacity planning for AI platforms serving large user bases.

As AI chatbot adoption accelerates, users increasingly expect enterprise-grade reliability from these services. Platforms that consistently deliver uptime and transparent incident communication will maintain competitive advantages in the rapidly evolving AI market.