Major AI Chatbots Fail Critical Mental Health Safety Standards for Adolescents

Recent assessments reveal that leading AI chatbots including ChatGPT, Claude, and Gemini fall short of essential mental health safety benchmarks designed to protect vulnerable teenage users, raising urgent questions about their deployment in youth mental health support.

Recent independent assessments have exposed significant gaps in the mental health safety protocols of leading AI chatbots when tested against standards designed to protect adolescent users. In these technical safety evaluations, ChatGPT, Claude, Gemini, and other mainstream conversational AI systems showed concerning shortcomings in handling sensitive mental health scenarios involving teenagers.

The findings underscore a critical disconnect between the rapid adoption of AI chatbots by young people seeking mental health information and the safeguards these systems actually have in place to protect vulnerable users.

The Safety Assessment Gap

Mental health safety assessments for adolescents typically evaluate whether AI systems can:

- Recognize crisis indicators and suicidal ideation

- Appropriately escalate to human professionals

- Avoid providing harmful therapeutic advice

- Maintain appropriate boundaries in sensitive conversations

- Refuse requests that could exacerbate mental health conditions

When major chatbots were tested against these criteria, results revealed inconsistent performance across platforms. Some systems failed to recognize warning signs of self-harm, while others provided generic responses that lacked the nuance required for adolescent mental health contexts.

Why This Matters for Teen Users

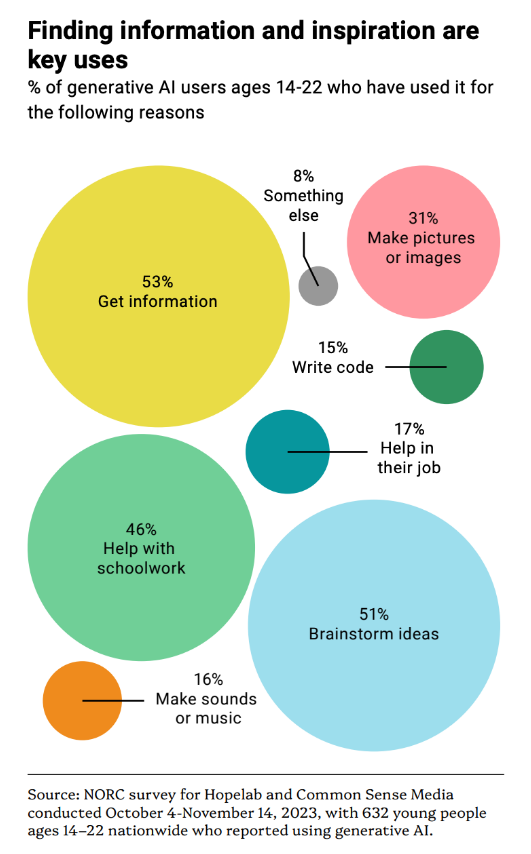

Adolescents increasingly turn to AI chatbots for mental health information and emotional support. Survey data indicates that a substantial portion of teenagers now use AI tools for schoolwork and information-seeking, with many extending this usage to personal and mental health questions. This trend reflects both the accessibility of these tools and a potential gap in traditional mental health resources available to young people.

However, the systems they're accessing were not specifically designed or validated for adolescent mental health support. Most major chatbots receive general safety training but lack specialized protocols for the developmental, emotional, and psychological needs of teenagers.

Key Technical Shortcomings

Crisis Recognition Failures: Several systems struggled to identify acute mental health crises in test scenarios, instead offering generic coping strategies that, in a real conversation, would delay appropriate intervention.

Boundary Violations: Some chatbots continued engaging in therapeutic-style conversations beyond appropriate limits, potentially creating false impressions of professional mental health support.

Inconsistent Escalation: Systems showed variable performance in recognizing when situations required human professional intervention, with some failing to suggest crisis resources entirely.

Age-Inappropriate Responses: Responses designed for adult users were sometimes delivered to adolescents without modification, missing developmental considerations critical to teen mental health.

The Regulatory and Ethical Landscape

These findings arrive as regulators and mental health organizations increasingly scrutinize AI deployment in sensitive domains. Without standardized safety benchmarks for AI interactions with adolescents, commercial chatbots operate with minimal oversight in a domain that draws many vulnerable users.

Mental health professionals have raised concerns that inadequately designed AI systems could inadvertently cause harm by mishandling disclosures, normalizing untreated conditions, or substituting AI interaction for necessary professional care.

Path Forward

Addressing these gaps will require collaboration between AI developers, mental health experts, and regulatory bodies to establish:

- Adolescent-specific safety standards for mental health conversations

- Mandatory testing protocols before deployment

- Clear labeling of AI limitations in mental health contexts

- Integration of crisis recognition and escalation pathways

- Regular independent auditing of safety performance

The current situation reflects a broader challenge in AI development: the speed of deployment often outpaces the establishment of safety frameworks, particularly for vulnerable populations. As chatbot usage among teenagers continues to grow, closing these safety gaps has become an urgent priority.
