Microsoft Study Reveals Users Turning to Copilot for Personal Health and Relationship Guidance

A new Microsoft study shows a significant shift in user behavior: a growing number of users rely on Copilot for advice on sensitive personal matters, including health concerns and relationship issues. The trend raises important questions about the role of AI in intimate decision-making.

Users Increasingly Seek Personal Advice from Copilot

A recent Microsoft study has documented a notable trend: users are increasingly turning to Copilot for guidance on deeply personal matters, including health concerns and relationship advice. The research highlights a fundamental shift in how people approach decision-making in areas traditionally reserved for professionals, family, or trusted confidants.

The study underscores the growing reliance on large language models as a first point of contact for sensitive inquiries. Rather than immediately consulting healthcare providers or relationship counselors, users are leveraging Copilot's accessibility and perceived neutrality to explore questions about wellness, medical symptoms, and interpersonal dynamics.

Key Findings from the Research

The Microsoft data reveals several important patterns:

- Health Inquiries: Users are querying Copilot about medical symptoms, treatment options, and wellness strategies with increasing frequency

- Relationship Matters: Questions about dating, marriage, family dynamics, and interpersonal conflict represent a significant portion of personal advice requests

- Accessibility Factor: The 24/7 availability and non-judgmental nature of the AI assistant make it an attractive alternative to scheduling appointments or seeking human counsel

- Demographic Spread: The trend spans multiple age groups and geographic regions, indicating broad adoption across user bases

Implications for AI and Professional Services

This behavioral shift carries significant implications for both the technology sector and traditional professional services. While Copilot can provide general information and perspective, the study implicitly raises questions about the appropriateness of relying on AI systems for decisions with real-world health and relationship consequences.

Microsoft has positioned Copilot as a productivity and information tool, yet the research demonstrates that users are extending its role into domains where professional expertise, clinical judgment, and human empathy have traditionally played critical roles. In practice, users are increasingly blurring the line between seeking information and seeking professional advice.

Considerations for Users

Those leveraging Copilot for personal guidance should recognize several limitations:

- AI systems operate based on training data and cannot provide a personalized medical diagnosis

- Relationship advice from algorithms lacks the contextual understanding a therapist or counselor brings

- Critical health decisions should always involve qualified medical professionals

- Privacy considerations apply when discussing sensitive personal information with any digital system

The Broader Context

The study reflects a wider phenomenon of AI integration into daily life. As large language models become more sophisticated and accessible through mobile applications and web interfaces, their use cases naturally expand beyond initial design parameters. Users discover new applications for these tools based on their needs and the tools' apparent capabilities.

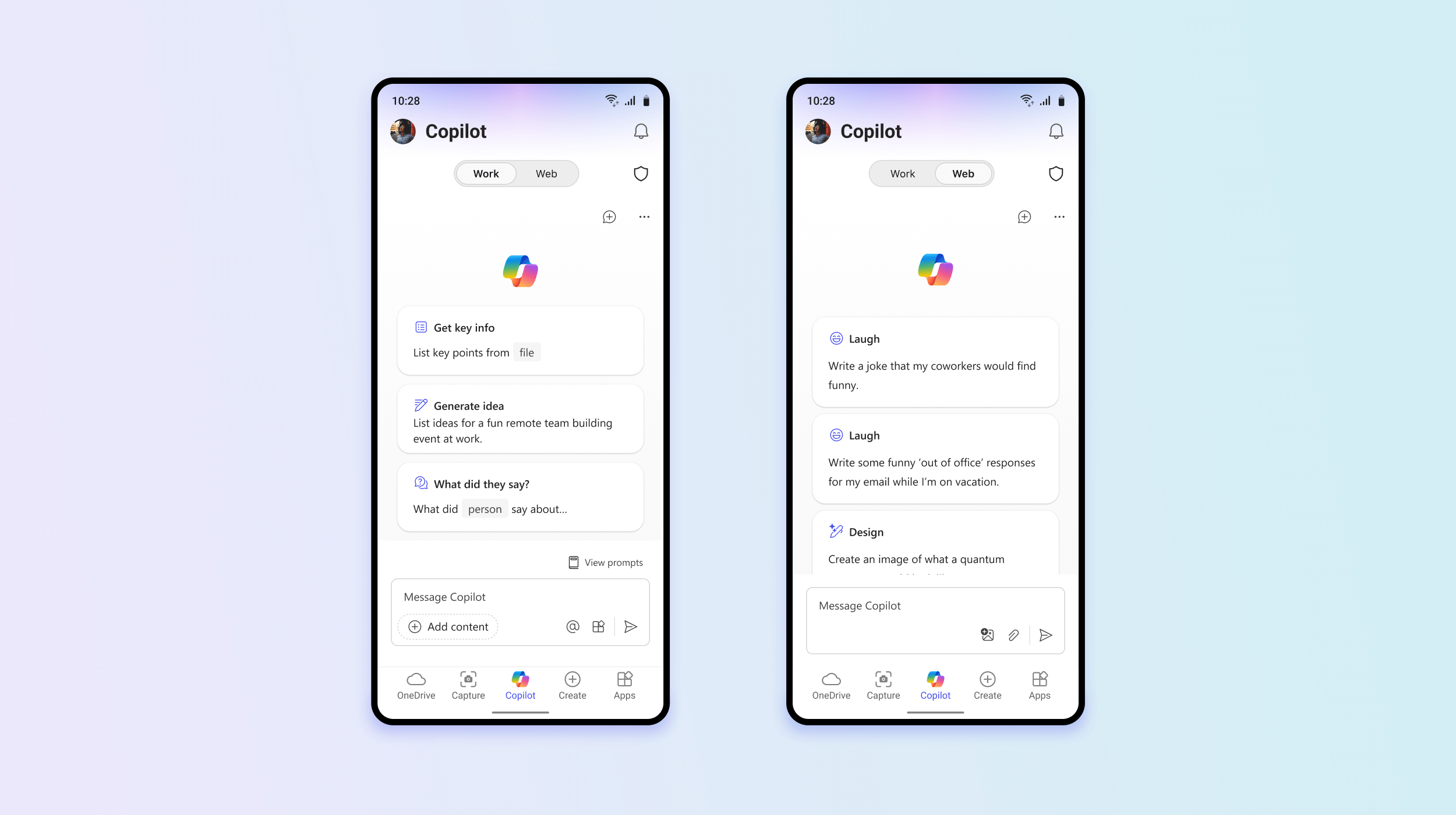

Microsoft's Copilot, available across multiple platforms including mobile devices and web browsers, has become increasingly embedded in user workflows. The research suggests that this accessibility is driving adoption patterns that extend well beyond workplace productivity, the original focus of the Copilot initiative.

Looking Forward

The findings present both opportunities and challenges. On one hand, Copilot can democratize access to information and provide a judgment-free space for initial exploration of personal concerns. On the other hand, the trend underscores the need for clearer guidelines about appropriate use cases and the importance of maintaining human expertise in sensitive domains.

As AI systems continue to evolve and integrate into everyday life, understanding how users actually employ these tools, as opposed to how designers intended them to be used, becomes essential for both responsible development and informed user behavior.

Key Sources

- Microsoft Study on Copilot Usage Patterns and User Behavior

- Microsoft Copilot Platform Documentation and Capabilities

- Industry Analysis on AI Integration in Personal Decision-Making