OpenAI Economist Resigns Over Pressure to Downplay AI Job Displacement Risks

A senior economist at OpenAI has resigned, citing internal pressure to minimize public discussion of the significant employment risks posed by advanced AI systems. The departure raises questions about corporate accountability in addressing labor market disruption.

A senior economist at OpenAI has stepped down from their position, citing organizational pressure to downplay the risks of job losses resulting from artificial intelligence deployment. The resignation underscores growing tensions between AI companies' public messaging and internal assessments of labor market disruption.

The Resignation and Its Implications

The economist's departure signals a potential rift between OpenAI's corporate interests and candid economic analysis regarding AI's employment impact. By resigning rather than continuing under constraints, the economist has drawn attention to what may be systemic pressure within leading AI organizations to present an optimistic narrative about workforce transitions.

This move reflects broader concerns within the tech industry about the gap between public statements and private assessments. Companies developing transformative technologies face competing pressures: shareholders expect growth narratives, while other stakeholders demand honest risk evaluation.

Understanding AI Job Displacement Risks

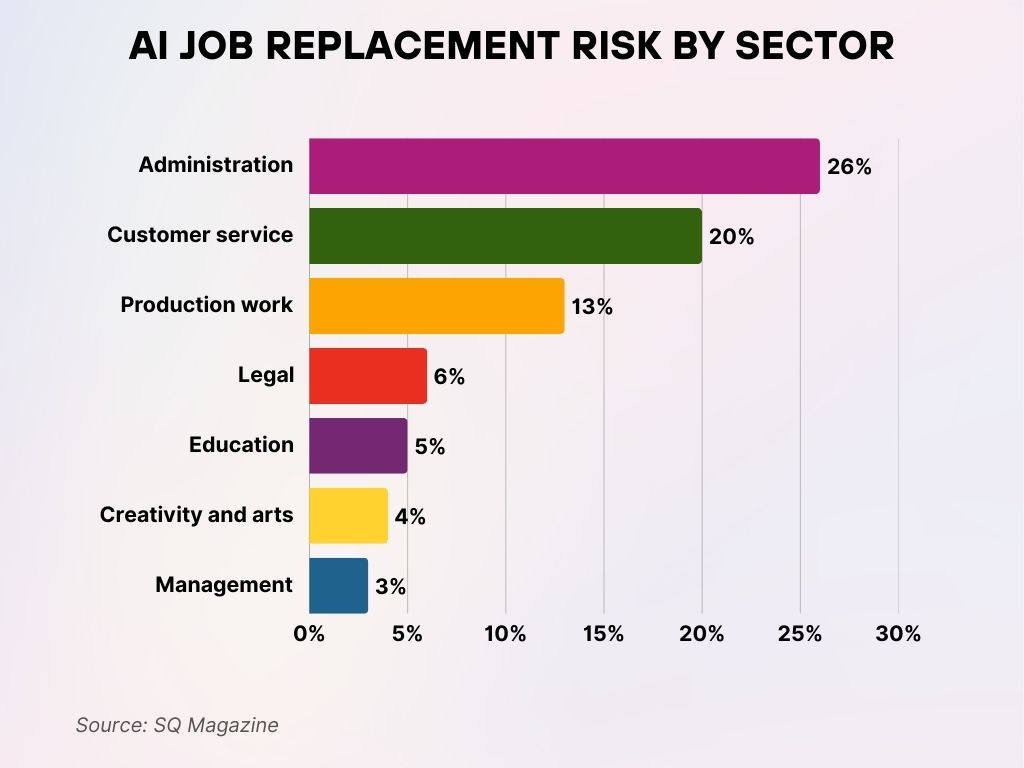

The concerns raised are substantive. Research indicates that AI systems can automate tasks across multiple sectors, from knowledge work to service industries. The scale and speed of potential displacement remain uncertain, but economists generally acknowledge that some job categories face genuine disruption risk.

Key areas of concern include:

- Administrative and clerical roles — vulnerable to automation through document processing and data management

- Customer service positions — increasingly handled by AI chatbots and automated systems

- Data analysis and reporting — tasks that AI can perform with growing efficiency

- Content creation and editing — areas where generative AI shows particular capability

- Routine programming tasks — facing competition from AI coding assistants

The challenge for policymakers and organizations is distinguishing between temporary displacement (requiring retraining) and permanent job elimination (requiring structural economic adjustment).

Corporate Accountability in AI Development

The resignation raises important questions about how AI companies should communicate about labor market impacts. There are legitimate business reasons for measured messaging: panic about job losses could trigger regulatory backlash or market instability. However, suppressing honest economic analysis creates different risks:

- Policy blindness — governments cannot prepare workforce transition programs without accurate risk assessment

- Credibility erosion — if companies are later proven to have downplayed risks, public trust deteriorates

- Talent concerns — employees with integrity may leave organizations perceived as dishonest about impacts

The Path Forward

OpenAI and similar organizations face a strategic choice among at least three courses of action:

- Engage transparently with economic research on AI labor impacts, acknowledging uncertainties while supporting evidence-based policy

- Continue managed messaging, risking eventual credibility loss and regulatory intervention

- Invest in solutions, funding retraining programs and economic transition support to demonstrate commitment beyond rhetoric

The economist's resignation suggests that at least some professionals within these organizations believe transparency is both ethically necessary and strategically important.

The broader conversation about AI's economic impact will likely intensify as deployment accelerates. How companies like OpenAI handle internal dissent on these issues may set important precedents for corporate responsibility in transformative technology development.