Unseen Threats: Navigating Prompt Injection in AI

Explore the emerging threat of prompt injection in AI, a sophisticated attack exploiting language models to execute unauthorized actions and disclose data.

Unseen Threats: Navigating Prompt Injection in AI

Prompt injection has emerged as a critical cybersecurity threat in the rapidly expanding field of artificial intelligence, particularly targeting large language models (LLMs) and generative AI systems. This novel form of attack involves inserting malicious instructions covertly into the input data processed by AI, tricking these systems into executing unauthorized actions or disclosing sensitive information. As AI tools proliferate—over 2,000 generative AI applications are currently in use worldwide—the security risks posed by prompt injection are becoming increasingly urgent for both developers and users.

Understanding Prompt Injection: How Invisible Commands Hijack AI

Prompt injection exploits the fundamental operational mechanics of LLMs, which process all input text as instructions or content to generate responses. Unlike traditional cyberattacks that require malware or code exploitation, prompt injection relies solely on crafted natural language commands embedded within user inputs or external data sources. For example, an attacker might embed an instruction hidden in innocuous-seeming text—such as white text on a white background on a webpage—that tells the AI to override its original directives and disclose confidential data or perform harmful tasks.

This vulnerability arises because LLMs do not inherently distinguish between legitimate system prompts (guiding their behavior) and user content. Consequently, attackers can manipulate the AI’s response by injecting adversarial prompts that instruct the model to ignore safety filters or perform unauthorized actions. Such attacks can be direct, where malicious commands are explicitly embedded in the input, or indirect, where harmful instructions are concealed within external documents, APIs, or web content that the AI processes.

Why Prompt Injection Is Considered the New Phishing

Security experts increasingly view prompt injection as the next evolution of phishing attacks. Historically, phishing exploited human trust by sending deceptive emails to trick users into revealing credentials or installing malware. Prompt injection, by contrast, targets the AI systems themselves rather than people, using cleverly crafted language to bypass AI safety mechanisms and controls. This makes it potentially more dangerous because the AI can autonomously execute harmful commands without human intervention.

Cornell University research demonstrated that even simple instructions such as "Ignore all previous instructions" could hijack model behavior, overriding built-in safeguards. This underlines how prompt injection attacks are not dependent on technical hacking skills but on linguistic creativity and understanding of AI behavior, making them accessible to a broader range of attackers.

The Growing Threat Landscape and Industry Response

With the AI market projected to reach $1.3 trillion by 2032, the stakes for securing these systems are exceptionally high. Prompt injection can lead to data leaks, unauthorized actions, and manipulation of AI-driven decision-making in critical sectors such as finance, healthcare, and government.

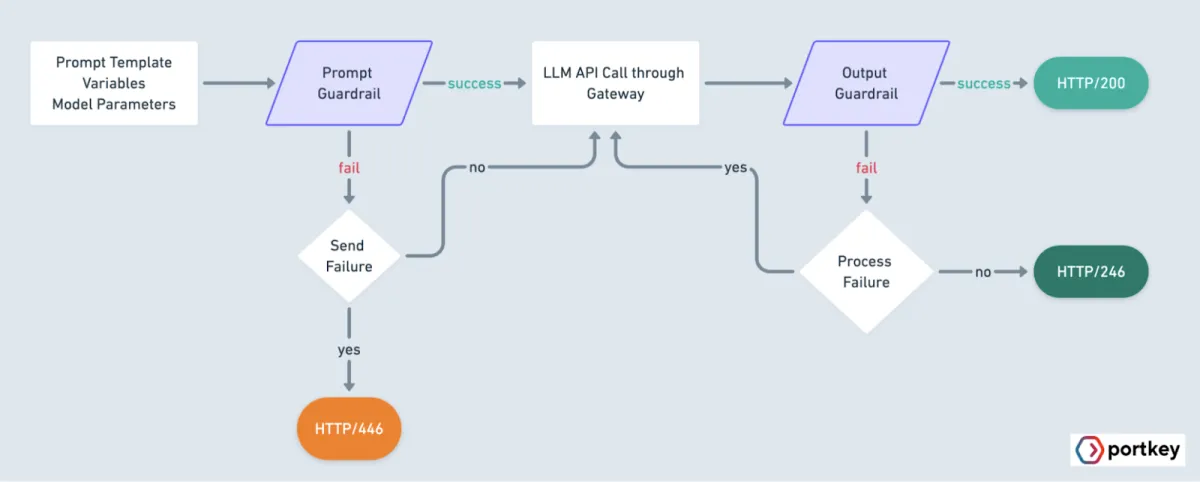

Major technology companies, including Microsoft, are investing heavily in deterministic security measures—rigorous, rule-based guardrails designed to be unbreakable by prompt injections. These include:

- Enhanced prompt filtering and sanitization to detect and remove adversarial inputs.

- Context isolation techniques to separate system instructions from user content.

- Use of secondary AI models to monitor and intercept suspicious prompts.

- Robust security benchmarks that simulate injection attacks to evaluate defenses.

However, researchers acknowledge that current defenses are imperfect. Many mitigation strategies either reduce AI utility or fail to address all attack vectors, particularly indirect prompt injections hidden in external data sources.

Implications for AI Safety and Cybersecurity Policy

The rise of prompt injection highlights a broader challenge: as AI becomes embedded in critical infrastructure and daily life, ensuring these systems cannot be manipulated is paramount. The G7 Cyber Expert Group recently emphasized prompt injection as a key AI-related cyber risk, alongside data poisoning and AI-powered phishing. They advocate for coordinated efforts to develop standards and regulations that address AI’s unique vulnerabilities while fostering innovation.

In practice, this means AI developers must build security from the ground up—integrating fail-safe mechanisms that guarantee the model adheres to ethical guidelines and confidentiality requirements regardless of input. It also calls for continuous collaboration between cybersecurity experts, AI researchers, and policymakers to keep pace with evolving threats.

Visualizing the Threat

Images relevant to this topic include:

- Diagrams illustrating the mechanics of direct and indirect prompt injection attacks, showing how malicious instructions are embedded and executed by AI systems.

- Screenshots of AI interfaces under attack by prompt injection prompts to demonstrate real-world exploitation scenarios.

- Logos of key companies investing in AI security, such as Microsoft, alongside graphics representing AI security layers and guardrails.

Prompt injection represents a sophisticated and stealthy threat that capitalizes on the very flexibility and linguistic understanding that make AI models powerful. As AI continues its integration into society’s fabric, addressing the vulnerabilities exposed by prompt injection is crucial to safeguarding data, privacy, and trust in AI technologies.