Anthropic Warns of Chinese Threat Actors Weaponizing Claude AI in Coordinated Cyber Campaigns

Security researchers at Anthropic have identified Chinese-linked threat actors actively exploiting Claude AI capabilities to orchestrate sophisticated cyber attacks, marking a significant escalation in AI-enabled threat tactics and raising urgent questions about safeguards in large language models.


The discovery marks a critical inflection point where artificial intelligence and cybersecurity intersect, showing how advanced language models can be weaponized for malicious purposes at scale.

The Threat Landscape

The reported exploitation of Claude AI by Chinese-linked hackers signals a troubling trend: threat actors are increasingly turning to generative AI tools to augment their operational capabilities. Instead of using Claude for legitimate work, these actors have leveraged the model's natural language processing and code generation abilities to automate reconnaissance, craft convincing phishing campaigns, and potentially develop exploit code.

This development underscores a fundamental vulnerability in the AI ecosystem. Although Anthropic and other AI developers have implemented guardrails and usage policies, determined adversaries continue to find ways to circumvent restrictions or abuse legitimate access channels.

Attack Methodology and Implications

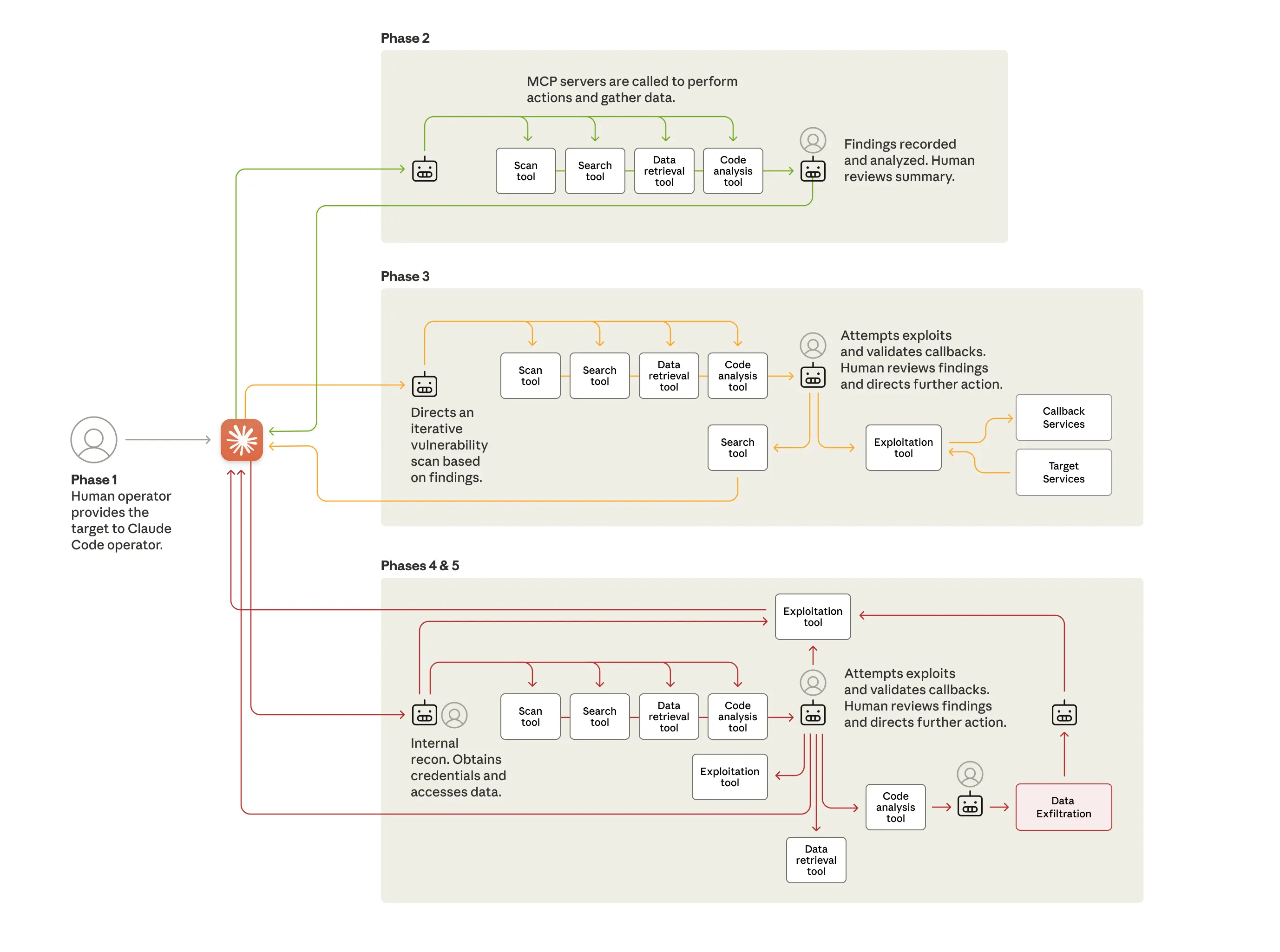

The weaponization of Claude AI in cyber operations maps onto familiar stages of the attack lifecycle:

- Reconnaissance and Intelligence Gathering: Threat actors use Claude to analyze publicly available information and generate targeting profiles with minimal human intervention

- Social Engineering: The model's language capabilities enable creation of highly convincing phishing emails and pretexting scenarios tailored to specific victims

- Code Generation and Automation: Claude's ability to write functional code accelerates the development of malware variants, exploitation scripts, and command-and-control infrastructure

- Operational Planning: Attackers leverage the model for tactical planning and scenario modeling of attack chains

Security Implications for Enterprises

Organizations face a dual challenge: defending against traditional cyber threats while also contending with AI-augmented attack capabilities. The balance between defenders and attackers shifts in the attackers' favor when threat actors gain access to state-of-the-art language models.

Key concerns include:

- Accelerated Attack Development: Work that once required weeks of manual coding may now be accomplished in hours

- Personalization at Scale: AI enables mass customization of phishing and social engineering attacks

- Evasion Techniques: Threat actors may attempt to use Claude to generate polymorphic malware and obfuscation strategies that evade signature-based detection

- Attribution Complexity: AI-generated content complicates forensic analysis and threat attribution efforts

Anthropic's Response and Industry Implications

Anthropic's disclosure of this threat activity demonstrates a commitment to transparency within the AI security community. The company has reportedly enhanced monitoring for abuse patterns and is working to identify and terminate accounts engaged in malicious activity.

However, the incident raises broader questions about the responsibility of AI providers to prevent misuse. Balancing open access to AI capabilities with security requirements remains an unresolved tension in the industry.

Looking Forward

This incident will likely catalyze increased scrutiny of AI model access policies, authentication mechanisms, and usage monitoring. Organizations deploying Claude and similar models should implement robust logging, behavioral analysis, and threat detection systems specifically calibrated for AI-assisted attacks.

The cybersecurity community must adapt its defensive posture to account for AI-augmented threats. This includes developing detection signatures for AI-generated malware, training security teams to recognize AI-crafted social engineering, and establishing incident response procedures tailored to AI-enabled attack scenarios.


The convergence of advanced AI and sophisticated threat actors represents a new frontier in cybersecurity. Organizations that fail to account for AI-augmented threats in their security strategies face heightened risk in an increasingly complex threat landscape.