DeepMind CEO Challenges LLM Path to AGI, Argues World Models Are Essential

DeepMind's leadership doubts that large language models alone can reach artificial general intelligence and is shifting its focus toward world models as the missing piece of the AGI puzzle.

The AGI Debate Heats Up: LLMs Aren't Enough

The race for artificial general intelligence has reached a critical juncture. While Silicon Valley continues to bet billions on scaling up large language models, DeepMind CEO Demis Hassabis is pushing back hard, arguing that LLMs alone cannot bridge the gap to true AGI. This is more than an academic disagreement: it is a fundamental challenge to the scaling hypothesis that has dominated AI development for the past three years.

The tension reflects a deeper schism in the AI research community. According to recent industry analysis, the debate between scaling advocates and their skeptics has intensified as the gains from simply adding parameters show diminishing returns. DeepMind's position signals a belief that the next breakthrough will come not from making models bigger but from fundamentally rethinking how machines understand the world.

Why World Models Matter More Than Token Prediction

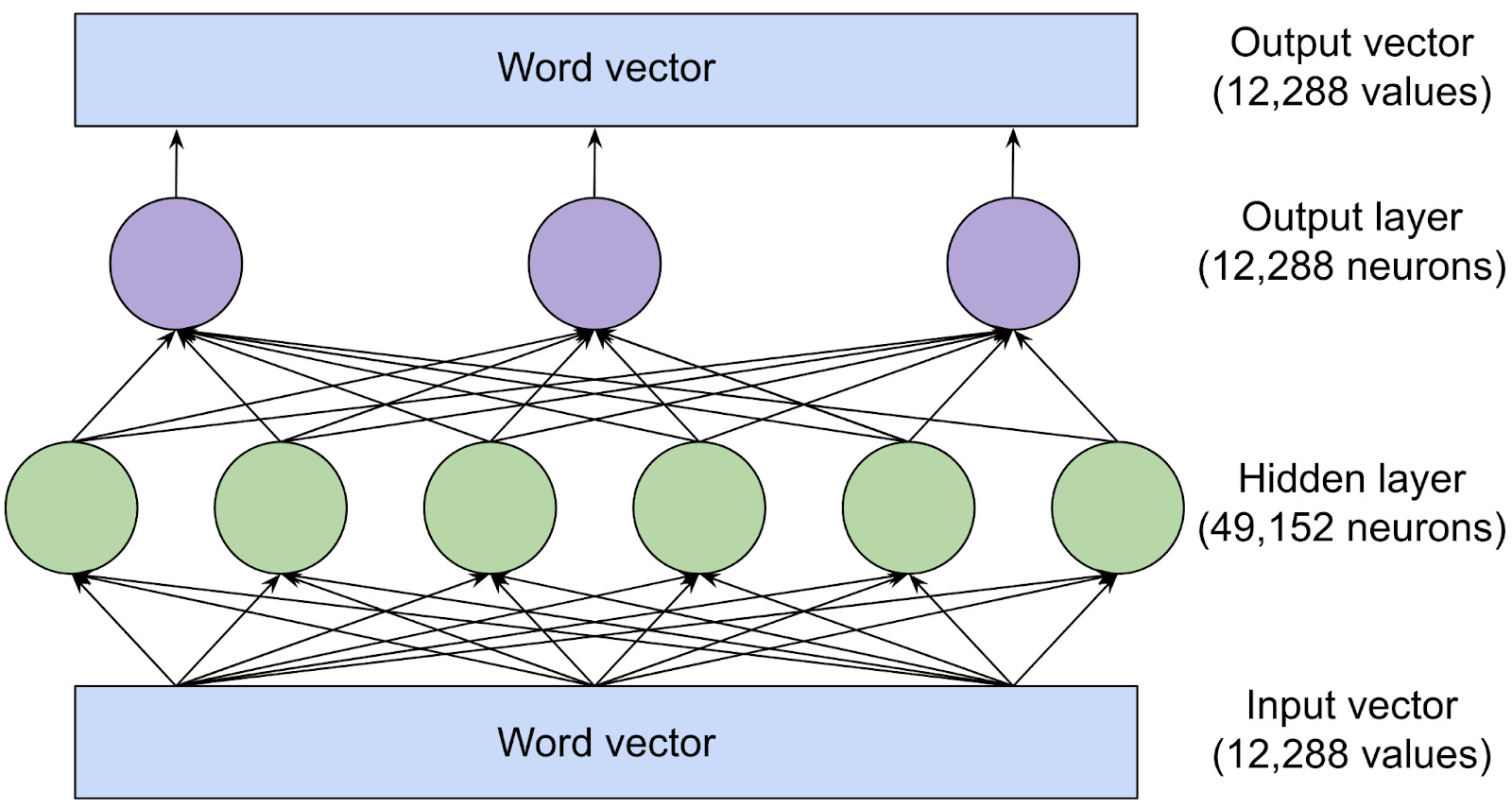

The core argument rests on a key distinction. Large language models excel at pattern matching and statistical prediction, but they lack something humans develop naturally: a coherent model of how the physical world works.

Key differences between LLMs and world models:

- LLMs: Generate text one token at a time, predicting each next token from statistical patterns learned during training

- World Models: Build internal representations of cause-and-effect relationships, physical dynamics, and spatial structure

- The Gap: LLMs can describe physics without modeling it; world models are meant to capture how the world actually behaves

DeepMind's research direction increasingly focuses on integrating world models with language understanding. This hybrid approach could enable AI systems to reason about novel scenarios rather than merely interpolating from training data.

The Competitive Landscape Shifts

This philosophical pivot has real implications for the AI arms race. While OpenAI and other LLM-focused labs continue scaling GPT-style architectures, DeepMind, even as it develops its own Gemini language models, is signaling a different path forward. The company's emphasis on world models aligns with growing skepticism across the industry about whether current approaches can achieve genuine AGI.

The global AI competition remains intense, with DeepMind's CEO warning that the window for American dominance is narrower than many assume. The decisive contest, however, may be less about who scales fastest than about who solves the world model problem first.

What This Means for the Industry

The implications are substantial. If DeepMind is correct, the next generation of AI breakthroughs won't come from simply training larger models on more data. Instead, they'll emerge from:

- Architectural innovation: New ways to integrate symbolic reasoning with neural networks

- Multimodal learning: Systems that learn from vision, language, and interaction simultaneously

- Causal reasoning: Moving beyond correlation to a genuine understanding of cause and effect

The broader conversation about AI's future suggests a turning point. The scaling era may be ending, giving way to an era focused on architectural depth and reasoning capability.

The Skepticism Question

What counts as AGI remains contentious, with different researchers offering competing definitions. DeepMind's skepticism about LLM-only approaches doesn't mean the company has solved AGI. It means that, in DeepMind's view, the path forward requires rethinking fundamental assumptions about how intelligence emerges.

The real story here isn't that one approach is winning. It's that the AI industry is fragmenting into competing visions of what AGI actually requires, and DeepMind's emphasis on world models is a serious challenge to the scaling narrative.