The AI Arms Race in Job Interviews: Challenges and Responses

AI is reshaping job interviews, with candidates using it to cheat and employers fighting back. Trust is eroding, prompting a shift back to in-person interviews.

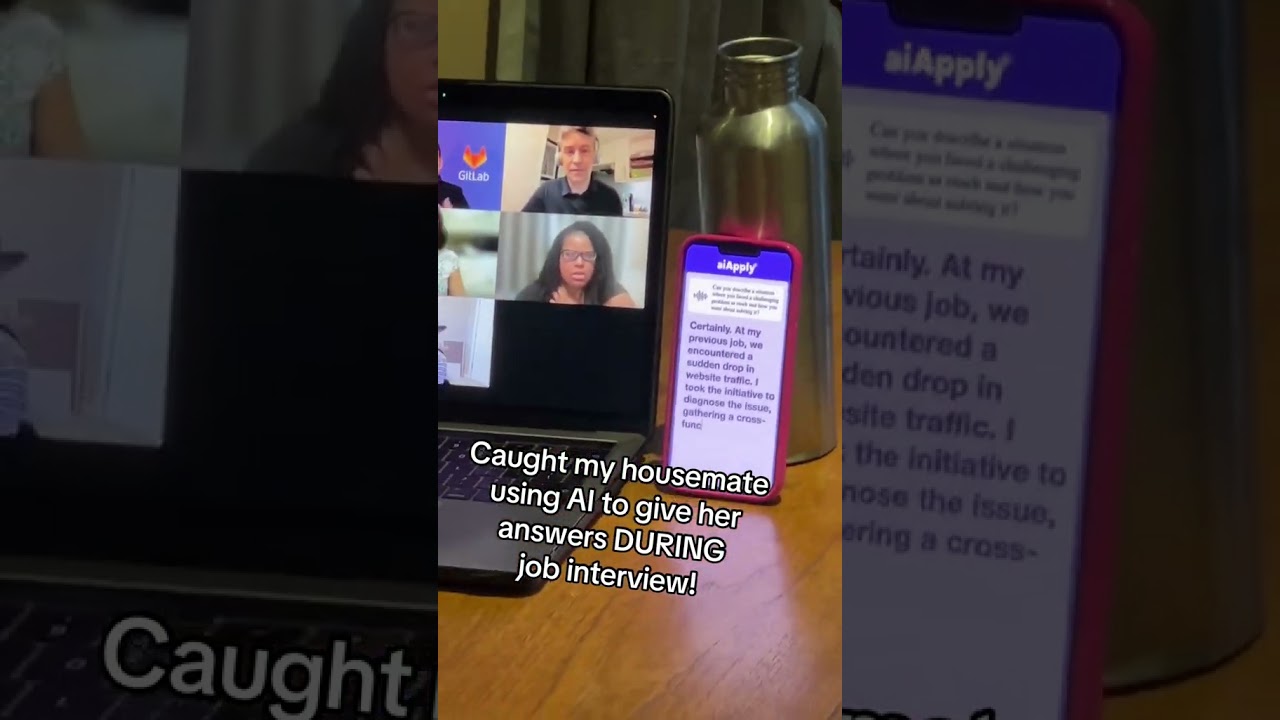

AI Cheating in Job Interviews: The New Arms Race in Hiring

A quiet shift is unfolding in the job market: candidates are increasingly using artificial intelligence to cheat during interviews, and employers are scrambling to protect the integrity of their hiring processes. What began as a trickle of remote interview fraud has become a flood, with companies reporting a 347% increase in incidents since 2020. Candidates now use AI not just to polish resumes but also to answer technical questions in real time, manipulate video feeds, and even impersonate others with deepfake technology. The result is a hiring landscape in which trust is eroding and the line between human and machine performance is blurring.

How AI Cheating Works

Modern job seekers have access to an array of AI-powered tools designed to boost their interview performance. These range from chatbots that provide real-time answers to coding questions, to “candidate enhancement” software that generates polished responses for behavioral interviews. In more extreme cases, candidates use video manipulation or deepfake technology to appear as someone else entirely during remote interviews. Such tools are widely available, with vendors actively marketing strategies for leveraging AI throughout the hiring process.

- About a third of FAANG interviewers report having caught candidates cheating.

- 81% suspect that AI-assisted cheating is occurring.

- One in five candidates now use AI to inflate their interview scores.

Industry Response: Detection, Prevention, and a Return to In-Person Interviews

Employers are fighting back with a mix of technology and policy changes. Meta, for example, has made cheating detection a priority, requiring candidates to share their entire screen, disable background filters, and justify any suspicion of dishonesty. Leading technical interview platforms like CoderPad now enable cheating detection by default, monitoring for suspicious activity such as copy-pasting or leaving the interview browser tab. New tools like Sherlock AI claim to detect most forms of cheating with 92% accuracy, far surpassing traditional proctoring methods.
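To make the kind of monitoring described above concrete, here is a minimal sketch of how a platform might flag large pastes and long absences from the interview tab. It is not how CoderPad, Sherlock AI, or any other vendor actually works: the `Event` schema, the `flag_suspicious` function, and both thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


# Hypothetical event schema; real platforms define their own telemetry.
@dataclass(frozen=True)
class Event:
    t: float        # seconds since the interview started
    kind: str       # "paste", "blur" (tab lost focus), or "focus"
    chars: int = 0  # characters pasted; only meaningful for "paste"


def flag_suspicious(events, max_paste_chars=200, max_away_seconds=15.0):
    """Return human-readable flags for large pastes and long tab absences.

    Both thresholds are arbitrary illustrative defaults, not vendor values.
    """
    flags = []
    blurred_at = None  # time the tab lost focus, if it is currently away
    for e in sorted(events, key=lambda ev: ev.t):
        if e.kind == "paste" and e.chars > max_paste_chars:
            flags.append(f"{e.t:.0f}s: pasted {e.chars} chars")
        elif e.kind == "blur":
            if blurred_at is None:
                blurred_at = e.t
        elif e.kind == "focus" and blurred_at is not None:
            away = e.t - blurred_at
            if away > max_away_seconds:
                flags.append(f"{blurred_at:.0f}s: left tab for {away:.0f}s")
            blurred_at = None
    return flags


# Example session: one short paste, one 45-second absence, one large paste.
session = [
    Event(10.0, "paste", chars=12),
    Event(95.0, "blur"),
    Event(140.0, "focus"),
    Event(150.0, "paste", chars=640),
]
for flag in flag_suspicious(session):
    print(flag)
```

In this sketch the short paste is ignored, while the 45-second absence and the 640-character paste are both flagged. Signals like these are best treated as prompts for a human reviewer rather than proof of cheating, since a candidate may paste their own notes or switch tabs for an innocent reason.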

Despite these advances, only 11% of FAANG companies currently use dedicated cheating detection software, suggesting that most organizations remain vulnerable. In response, many are reconsidering the role of remote interviews altogether. A Gartner survey found that 72.4% of recruiting leaders now favor in-person interviews to combat fraud, with major firms like Google, Cisco, and McKinsey reintroducing face-to-face meetings as a verification step.

The Human Cost: Eroding Trust and Dehumanizing Hiring

The rise of AI cheating is not just a technical challenge; it is also a cultural one. HR teams report growing difficulty in distinguishing genuine candidates from those reading scripted AI responses. The consequence is a hiring process that feels increasingly transactional and distrustful, with both candidates and employers frustrated by the arms-race dynamic. Even candidates with substantial experience find themselves rejected by AI-driven interview systems that fail to capture their true capabilities.

The ethical barriers to using AI in interviews have also collapsed. What was once seen as dishonest is now often viewed as a competitive necessity, with vendors openly coaching candidates on how to game the system. This shift has led to a paradox: the same technology that promises to streamline hiring is undermining its fairness and reliability.

Broader Implications and the Path Forward

The implications of widespread AI cheating extend beyond individual hiring decisions. Companies that fail to adapt risk hiring candidates whose interview performance does not reflect their actual abilities, leading to retention and productivity issues down the line. There is also a growing recognition that the skills valued in an AI-saturated interview environment may not align with those needed on the job, further complicating talent assessment.

Industry leaders are now exploring hybrid approaches that combine the efficiency of AI screening with the authenticity of human interaction. Some advocate for structured, panel-based interviews where AI assists but does not replace human judgment. Others emphasize the need for continuous innovation in fraud detection, as the sophistication of cheating tools continues to evolve.

Conclusion

The use of AI to cheat in job interviews represents a profound challenge to the future of hiring. As candidates and employers engage in a technological arms race, the fundamental trust underpinning the employment relationship is at risk. Companies that wish to stay ahead must invest in advanced detection tools, reconsider the balance between remote and in-person interviews, and foster a hiring culture that values authenticity as much as efficiency. In an age of artificial noise, the human signal has never been more valuable, or harder to find.