ChatGPT Health Faces Mounting Security and Privacy Scrutiny

OpenAI's ChatGPT Health faces intense scrutiny over security flaws and privacy gaps as millions turn to AI for medical advice. Experts warn the platform lacks adequate safeguards for sensitive health data.

The Stakes Are Rising in AI Healthcare

OpenAI's foray into healthcare with ChatGPT Health has collided with a harsh reality: deploying AI in medical contexts demands far more rigorous safeguards than consumer applications do. With a reported 40 million people using ChatGPT to answer healthcare questions, security researchers and privacy advocates are raising alarms about the platform's readiness to handle sensitive patient data.

The tension is stark. While OpenAI positions ChatGPT Health as a tool to simplify everyday health inquiries, critics argue the company is dancing around fundamental governance and privacy concerns rather than addressing them head-on. This gap between marketing promise and technical reality threatens to undermine trust in AI-assisted healthcare at a critical moment.

What ChatGPT Health Claims to Do

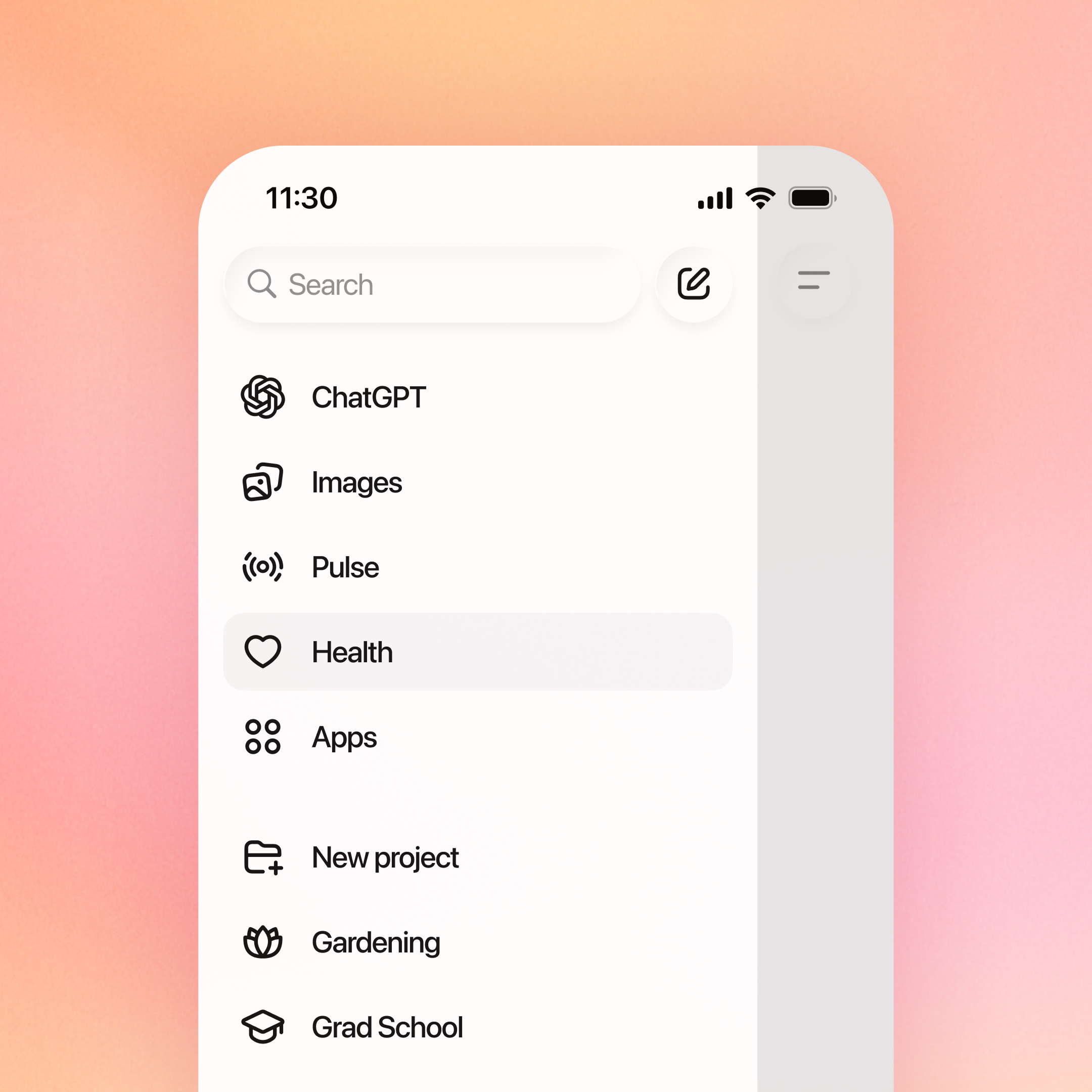

According to OpenAI's official announcement, the platform integrates fitness app data and provides personalized health insights. The feature set includes:

- Aggregation of health metrics from connected wearables and fitness applications

- Contextual health guidance based on user data

- Simplified explanations of medical concepts

The appeal is obvious: millions already turn to ChatGPT for health advice, often without proper medical context. A structured, data-aware version could theoretically improve accuracy and reduce harmful misinformation.

The Security Vulnerabilities

However, the technical foundation raises serious questions. Security analysts say healthcare providers must exercise extreme caution with ChatGPT applications, citing multiple risk vectors:

- Data exposure risks: Integrating fitness apps and health records creates new attack surfaces for data breaches

- Model hallucination: ChatGPT can generate plausible-sounding but medically inaccurate information, potentially leading users to dangerous decisions

- Lack of medical validation: Unlike FDA-regulated medical devices, ChatGPT Health operates in a regulatory gray zone

The Hacker News reported that security researchers have identified potential vulnerabilities in how the platform handles encrypted health data and manages user authentication across integrated third-party services.

Privacy Governance Gaps

The privacy concerns extend beyond technical security. Government and healthcare security experts have flagged serious governance issues, including:

- Unclear data retention policies for health information

- Ambiguous consent mechanisms when integrating third-party fitness apps

- Limited transparency about how OpenAI trains its models using health data

- Insufficient compliance frameworks for HIPAA and international privacy regulations

The Register's analysis highlighted that OpenAI's terms of service remain vague about whether health conversations are used to improve the underlying model, a practice that would be ethically problematic in healthcare contexts.

The Regulatory Reckoning Ahead

The scrutiny reflects a broader tension in AI healthcare: innovation speed versus safety rigor. Traditional medical devices undergo years of testing and regulatory approval. ChatGPT Health, by contrast, launched with minimal external validation and faces mounting pressure to prove it won't harm users.

OpenAI claims it has implemented safeguards, but independent verification is limited. The company's track record of addressing security concerns reactively rather than proactively adds to skepticism.

What Users Should Know

Until these issues are resolved, healthcare professionals and patients should approach ChatGPT Health with caution:

- Treat it as a supplementary information tool, not a diagnostic resource

- Never share sensitive personal health information without first understanding the platform's data handling practices

- Verify any health guidance with qualified medical professionals

- Monitor your connected fitness app permissions carefully
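For readers who want to act on the advice about sensitive information, part of it can be automated before any text is pasted into a chatbot. The following is a minimal sketch, assuming a user wants to strip a few obvious identifiers from a note locally. The `PATTERNS` table and the `redact` function are hypothetical names introduced for illustration and are not part of any OpenAI tool. Regex-based redaction catches only simple, well-formed identifiers and will miss names, addresses, and free-text details, so it complements the precautions above rather than replacing them.

```python
import re

# Hypothetical patterns for a few common identifier formats (US-style).
# Real personal health information takes many more forms than these.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    note = "DOB 03/14/1985. Reach me at jane.doe@example.com or 555-123-4567."
    print(redact(note))
```

Running the redaction locally, before the text leaves the device, is the design choice that matters here: it does not depend on how the receiving service stores or uses the data.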

The promise of AI-assisted healthcare is real, but ChatGPT Health's current implementation suggests OpenAI has moved faster than its security and privacy infrastructure can support.