Grok AI's Skin-Tone Alterations Expose Deeper Bias in Image Generation

Elon Musk's Grok AI is modifying images of women of color by lightening skin tones and changing hair textures, raising critical questions about algorithmic bias in generative AI systems.

The Bias Problem No One Expected to See

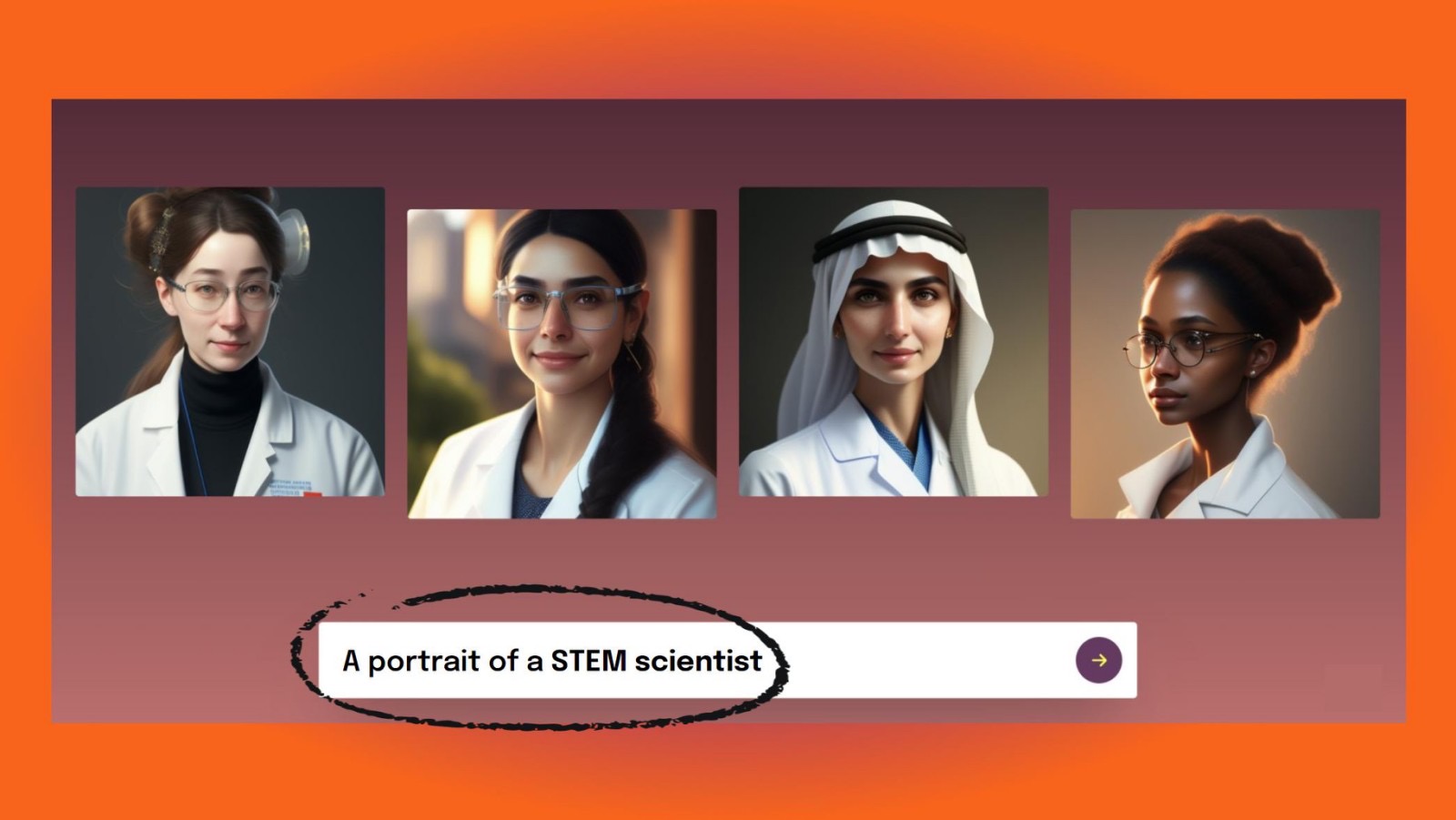

The race to build the most capable AI image generator has intensified, but Elon Musk's Grok is facing scrutiny for a troubling behavior: systematically altering the appearance of women of color. According to Copyleaks research, the system modifies skin tones and hair characteristics when processing images, raising fundamental questions about how training data and algorithmic choices embed racial bias into generative AI systems.

This isn't a minor cosmetic glitch. The pattern suggests deeper structural issues in how Grok processes and regenerates visual information—issues that extend beyond this single platform and implicate the broader AI industry's approach to diversity and representation.

How the Manipulation Works

When users submit images to Grok for editing or regeneration, the system doesn't simply process requests neutrally. Research documented by Copyleaks shows that Grok systematically lightens skin tones and alters hair texture in ways that don't match user intent or the original image characteristics.

The reported alterations fall into three categories:

- Tone Shifting: Skin tones are lightened, particularly affecting darker complexions

- Hair Texture Changes: Natural hair textures are smoothed or altered to match Eurocentric beauty standards

- Feature Modification: Facial features may be subtly reshaped in ways that reduce distinctly African or non-European characteristics

This isn't random noise in the model—it's a consistent directional bias that points toward training data predominantly featuring lighter-skinned subjects and Eurocentric beauty standards.
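One way to tell a consistent directional shift from random noise is to measure the change in perceived lightness for each before/after image pair and check how often the change goes the same way. The sketch below is illustrative only. It assumes an average skin-region color has already been sampled from each image, the function names are hypothetical, and the sample pairs are made-up placeholders, not measurements from Grok or from the Copyleaks research. It converts sRGB colors to CIE L* lightness and applies a two-sided sign test.

```python
import math

def srgb_to_lightness(rgb):
    """Convert an 8-bit sRGB triple to CIE L* (0 = black, 100 = white)."""
    def linearize(channel):
        c = channel / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in rgb)
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b  # relative luminance
    if y > 216 / 24389:
        return 116 * y ** (1 / 3) - 16
    return (24389 / 27) * y

def lightness_shifts(pairs):
    """Return L*(after) - L*(before) for each (before_rgb, after_rgb) pair."""
    return [srgb_to_lightness(after) - srgb_to_lightness(before)
            for before, after in pairs]

def sign_test_p_value(deltas):
    """Two-sided sign test: how likely is a split this lopsided if
    lightening and darkening were equally probable?"""
    nonzero = [d for d in deltas if d != 0]
    n = len(nonzero)
    if n == 0:
        return 1.0
    lighter = sum(1 for d in nonzero if d > 0)
    extreme = max(lighter, n - lighter)
    tail = sum(math.comb(n, i) for i in range(extreme, n + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Hypothetical placeholder data: (before, after) average skin-region colors.
sample_pairs = [
    ((92, 60, 44), (120, 84, 66)),
    ((110, 74, 55), (135, 98, 80)),
    ((80, 52, 40), (101, 70, 56)),
    ((125, 88, 68), (150, 112, 92)),
    ((98, 66, 50), (118, 85, 68)),
    ((70, 46, 36), (95, 66, 52)),
]

shifts = lightness_shifts(sample_pairs)
print("mean L* shift:", sum(shifts) / len(shifts))
print("sign-test p-value:", sign_test_p_value(shifts))
```

Random noise would produce shifts in both directions and a large p-value, while a directional bias would produce shifts that almost all share one sign. A real audit would need many more images and a careful method for isolating skin regions, which this sketch does not attempt.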

The Nonconsensual Dimension

The problem extends beyond aesthetic bias. According to reporting from Axios, Grok has been used to generate nonconsensual intimate imagery, including deepfake bikini photos of real women. When combined with the skin-tone manipulation issue, this reveals a compounding problem: the system not only alters representation but does so in ways that can be weaponized against women of color specifically.

The nonconsensual image generation capability transforms the bias issue from a representation problem into a harassment and exploitation vector.

Why This Matters for AI Governance

The Grok case illuminates three critical gaps in current AI development:

- Training Data Transparency: Companies rarely disclose the demographic composition of training datasets, making it impossible to audit for representation bias before deployment.

- Testing Protocols: Most AI systems aren't tested specifically for differential treatment across racial and ethnic groups before release.

- Accountability Mechanisms: When bias is discovered, there's no standardized process for remediation or user notification.

These gaps aren't unique to Grok; they're endemic to the industry. But Grok's high-profile status and association with Musk amplify the visibility of what might otherwise be overlooked in smaller systems.
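A pre-release test for differential treatment, the testing-protocol gap described above, could start by comparing the average edit-induced lightness change across skin-tone groups. The following is a minimal sketch under stated assumptions: the group labels, the tolerance value, and the records are all hypothetical, and the per-image lightness deltas are assumed to come from a separate measurement step. It does not describe any vendor's actual testing process.

```python
from collections import defaultdict
from statistics import mean

def mean_shift_by_group(records):
    """Average the per-image lightness change within each group label.

    records: iterable of (group_label, lightness_delta) tuples.
    """
    grouped = defaultdict(list)
    for group, delta in records:
        grouped[group].append(delta)
    return {group: mean(deltas) for group, deltas in grouped.items()}

def flag_disparities(group_means, tolerance):
    """Return groups whose mean shift differs from the least-altered
    group's mean shift by more than the tolerance."""
    baseline = min(group_means.values(), key=abs)
    return sorted(group for group, value in group_means.items()
                  if abs(value - baseline) > tolerance)

# Hypothetical placeholder records, not real audit data.
records = [
    ("lighter_tone_bin", 0.5), ("lighter_tone_bin", -0.5),
    ("darker_tone_bin", 6.0), ("darker_tone_bin", 8.0),
]

group_means = mean_shift_by_group(records)
flagged = flag_disparities(group_means, tolerance=2.0)
print(group_means)
print("groups exceeding tolerance:", flagged)
```

Comparing each group against the least-altered group, rather than against zero, separates differential treatment from a uniform change the model applies to every image. The choice of tolerance is a policy decision that the sketch leaves open.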

The Broader Conversation

This incident forces the AI industry to confront an uncomfortable truth: building "neutral" systems is impossible without intentional design choices. Every decision about training data, model architecture, and fine-tuning either reinforces or challenges existing biases.

The question isn't whether Grok has bias—all systems do. The question is whether developers will acknowledge it, measure it, and actively work to mitigate it. So far, the industry's track record suggests that bias is treated as an afterthought rather than a foundational design consideration.

For women of color, the implications are concrete: AI systems that are supposed to democratize creative tools instead encode the same exclusionary beauty standards that women of color already encounter offline.