ChatGPT's Safety Vulnerabilities: What Regulators Are Finding About Teen User Protection

OpenAI faces mounting scrutiny over potential safety gaps in ChatGPT's protections for teenage users, raising questions about content moderation, age verification, and responsible AI deployment in an era of widespread youth adoption.


OpenAI's ChatGPT has become one of the most widely adopted AI tools globally, with millions of users accessing the platform daily. Yet emerging concerns about the platform's safety mechanisms—particularly regarding teenage users—are prompting regulatory bodies and researchers to examine potential gaps in content moderation, age verification, and protective guardrails.

The Core Safety Concerns

Recent scrutiny has centered on several critical areas where ChatGPT's safety architecture may fall short for younger users:

- Inadequate age verification mechanisms that fail to reliably confirm user age before access

- Content moderation gaps that allow the model to generate potentially harmful responses without sufficient filtering

- Insufficient safeguards against manipulation by users seeking to bypass existing restrictions

- Limited transparency regarding how the platform handles data from underage users

The concern extends beyond simple content filtering. Researchers and regulators worry that ChatGPT's conversational nature—designed to be helpful, harmless, and honest—may inadvertently provide teenagers with information on sensitive topics including self-harm, substance abuse, or other dangerous behaviors without appropriate context or warnings.

How ChatGPT's Architecture Affects Safety

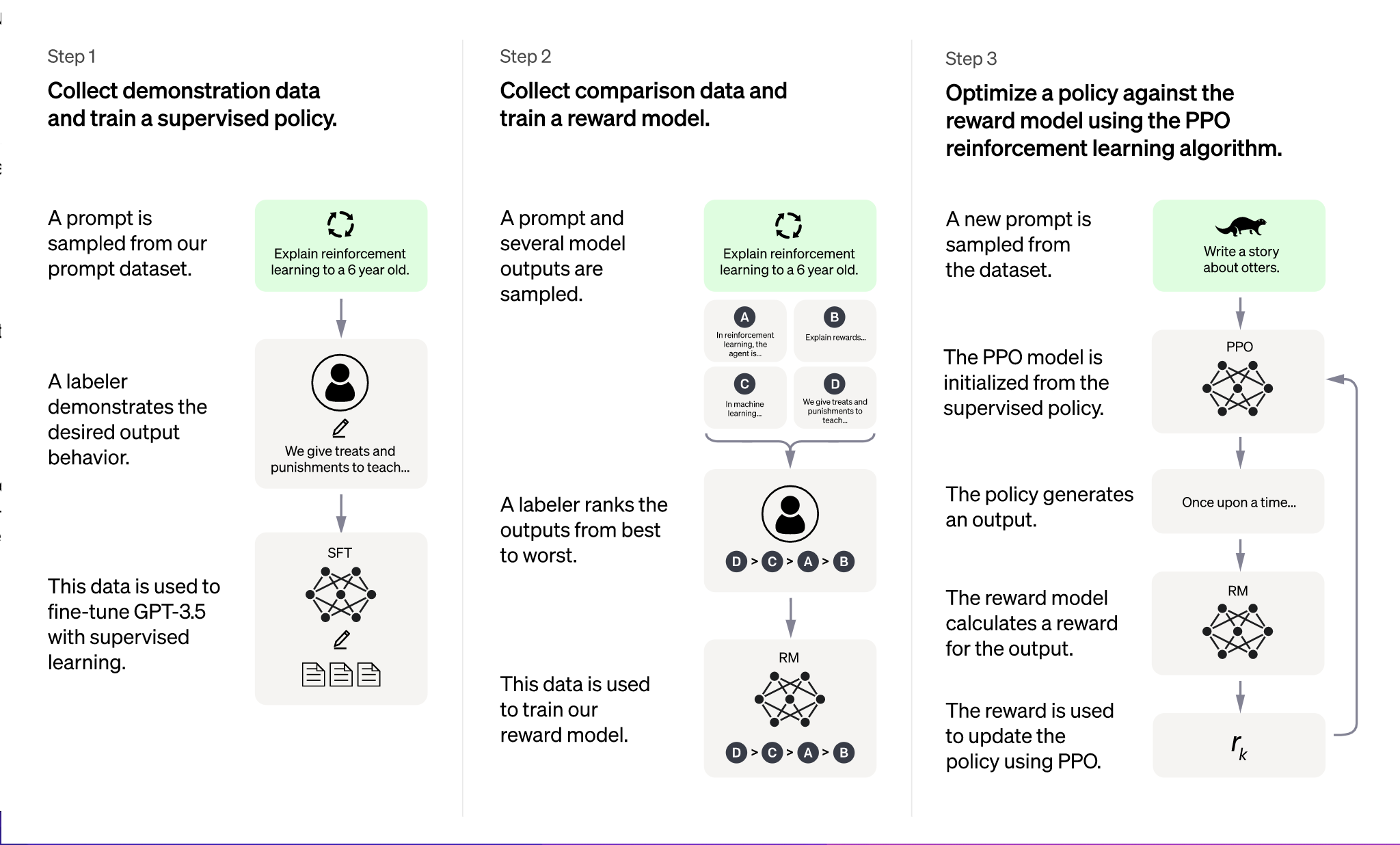

ChatGPT operates through a large language model trained on vast datasets, then fine-tuned using reinforcement learning from human feedback (RLHF). This architecture, while powerful for generating coherent responses, creates inherent challenges for age-appropriate content delivery. The model has no built-in knowledge of a user's age or developmental stage, so age-appropriate behavior depends largely on post-hoc filtering mechanisms, which can be inconsistent or circumvented.

OpenAI has implemented safety measures including:

- Content policy enforcement at the API level

- Automated detection systems for policy violations

- User reporting mechanisms for harmful outputs

However, many of these measures operate at the system level rather than within the model itself, meaning they act as external barriers rather than fundamental design features. This distinction matters for teenage users, who may be adept at finding workarounds.
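The difference between a system-level barrier and a model-level property can be sketched in a few lines. The example below is a minimal illustration, not OpenAI's pipeline: it assumes a hypothetical model stub (`echo_model`) and a deliberately naive keyword scorer (`keyword_risk_score`, with the placeholder term `restricted-topic`) standing in for a real safety classifier. The wrapper inspects the prompt and the reply after the fact, so the model itself knows nothing about the restriction, and a lightly reworded request slips past the filter.

```python
from typing import Callable

# Hypothetical stand-in for a safety classifier: a naive keyword scorer.
# It only matches exact whitespace-separated terms, which is what makes it easy to evade.
FLAGGED_TERMS = {"restricted-topic"}


def keyword_risk_score(text: str) -> float:
    """Return 1.0 if any flagged term appears as a whole word, else 0.0."""
    words = text.lower().split()
    return 1.0 if any(w in FLAGGED_TERMS for w in words) else 0.0


def filtered_reply(
    prompt: str,
    generate: Callable[[str], str],
    score: Callable[[str], float],
    threshold: float = 0.5,
    refusal: str = "Sorry, I can't help with that.",
) -> str:
    """System-level barrier: the model runs unchanged; the wrapper checks
    the prompt before generation and the reply after it."""
    if score(prompt) >= threshold:
        return refusal
    reply = generate(prompt)
    if score(reply) >= threshold:
        return refusal
    return reply


def echo_model(prompt: str) -> str:
    # Hypothetical model stub that simply restates the request.
    return f"Here is information about: {prompt}"


if __name__ == "__main__":
    # Exact term: caught by the wrapper.
    print(filtered_reply("tell me about restricted-topic", echo_model, keyword_risk_score))
    # Reworded request: the same intent passes straight through.
    print(filtered_reply("tell me about restricted topic", echo_model, keyword_risk_score))
```

Production systems rely on trained classifiers rather than keyword lists, but the structural point carries over: because the filter sits outside the model, its coverage is limited to what the classifier recognizes.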

Regulatory and Industry Response

The scrutiny reflects broader concerns about AI safety in consumer-facing applications. Regulators in the European Union, United Kingdom, and United States have begun examining whether platforms hosting generative AI tools adequately protect minors. The EU's Digital Services Act, for instance, imposes specific requirements for protecting minors from harmful content and manipulative design patterns.

Industry observers note that OpenAI's terms of use require users to be at least 13 years old, with parental or guardian permission required for users under 18, yet enforcement mechanisms remain weak. The platform relies primarily on user self-certification rather than robust verification systems.
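Self-certification amounts to checking values the user supplies. The sketch below assumes a hypothetical sign-up gate (`self_certified_gate`) that uses a minimum age of 13 and a parental-permission requirement below 18 as assumed boundaries. The point is that the logic can be perfectly correct while the gate stays weak, because nothing confirms that the declared birth date or the claimed permission is true.

```python
from datetime import date

MIN_AGE = 13    # assumption: minimum age stated in the terms
ADULT_AGE = 18  # assumption: below this, parental or guardian permission is required


def age_on(birth: date, today: date) -> int:
    """Whole years between birth and today."""
    years = today.year - birth.year
    # Subtract one if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth.month, birth.day):
        years -= 1
    return years


def self_certified_gate(declared_birth: date, parent_ok_claimed: bool, today: date) -> bool:
    """Hypothetical self-certification gate.

    Both inputs are whatever the user typed into a form; nothing here
    verifies that either one is true.
    """
    age = age_on(declared_birth, today)
    if age < MIN_AGE:
        return False
    if age < ADULT_AGE:
        return parent_ok_claimed
    return True


if __name__ == "__main__":
    today = date(2024, 6, 1)
    # A 12-year-old entering a truthful birth date is refused...
    print(self_certified_gate(date(2012, 1, 1), False, today))
    # ...but the same child entering an earlier year is admitted as an adult.
    print(self_certified_gate(date(2000, 1, 1), False, today))
```

A 12-year-old who types an earlier birth year passes exactly as an adult would; stronger verification has to draw on evidence the user cannot simply type in.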

Path Forward: What Needs to Change

Addressing these gaps will likely require:

Technical improvements: Enhanced content filtering specifically calibrated for different age groups, with clearer warnings about sensitive topics.

Verification systems: Stronger age verification mechanisms, potentially integrated with identity verification services.

Transparency measures: Clearer disclosure to users and parents about how ChatGPT handles requests from teenagers and what safeguards exist.

Ongoing monitoring: Regular third-party audits of safety mechanisms and their effectiveness across different user demographics.
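Age-calibrated filtering and audits across demographics fit together naturally. The following is a minimal sketch under stated assumptions: a classifier that outputs a risk score between 0 and 1, hypothetical per-tier thresholds (`TIER_THRESHOLDS`, with values chosen for illustration only), and an audit set in which reviewers have labelled which responses should have been blocked. The audit metric used here is the miss rate, the share of harmful responses that each tier's filter let through.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical thresholds on a 0-1 risk score; lower means stricter.
# Real values would need to be set and validated empirically.
TIER_THRESHOLDS = {"13-15": 0.3, "16-17": 0.5, "18+": 0.8}


def is_blocked(risk_score: float, tier: str) -> bool:
    """Block when the classifier's risk score meets the tier's threshold."""
    return risk_score >= TIER_THRESHOLDS[tier]


@dataclass
class AuditCase:
    tier: str          # age tier of the test user
    risk_score: float  # classifier output for the response
    harmful: bool      # reviewer judgement: should this have been blocked?


def miss_rate_by_tier(cases: list[AuditCase]) -> dict[str, float]:
    """Fraction of reviewer-labelled harmful responses NOT blocked, per tier.

    Tiers with no harmful cases are omitted to avoid dividing by zero.
    """
    harmful_total: dict[str, int] = defaultdict(int)
    missed: dict[str, int] = defaultdict(int)
    for case in cases:
        if not case.harmful:
            continue
        harmful_total[case.tier] += 1
        if not is_blocked(case.risk_score, case.tier):
            missed[case.tier] += 1
    return {tier: missed[tier] / n for tier, n in harmful_total.items()}


if __name__ == "__main__":
    cases = [
        AuditCase("13-15", 0.4, True),
        AuditCase("13-15", 0.35, True),
        AuditCase("18+", 0.4, True),
        AuditCase("18+", 0.9, True),
        AuditCase("18+", 0.1, False),
    ]
    print(miss_rate_by_tier(cases))
```

With these illustrative thresholds, a response scoring 0.4 is blocked for the 13-15 tier but allowed for adults. Reporting miss rates per tier, rather than one overall figure, makes it visible when a filter performs well on average but poorly for a particular age group.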

Key Takeaway

The tension between ChatGPT's utility and its safety for teenage users reflects a broader challenge in AI deployment: powerful tools designed for general audiences may require specialized safeguards when accessed by vulnerable populations. OpenAI's response to these concerns will likely set precedent for how other AI platforms approach youth safety in the coming years.

Key Sources: OpenAI's official safety documentation and policy frameworks; regulatory guidance from the EU Digital Services Act; ongoing research from AI safety organizations examining large language model vulnerabilities.